Initialises the KSQL DB Client with the provided request::ClientBuilder. It displays the details of the streams and tables along with their unique name. What version of ksqlDB are you using? Apache Kafka is an Open-source and Distributed Stream Processing platform that stores and handles real-time messages or data. How would I modify a coffee plant to grow outside the tropics? Since ksqlDB is similar to SQL Language in syntax and query statements, it is straightforward even for non-technical folks to seamlessly process the Streaming Data in the Kafka Clusters. Making statements based on opinion; back them up with references or personal experience. Read more, Mutably borrows from an owned value. In this article, you will learn about ksqlDB and how it is used to perform data processing operations on Kafka data. Execute the following commons to inspect the newly created table. Want to take Hevo for a spin? It's exactly like querying a classic relational database.

Immutably borrows from an owned value. By clicking Accept all cookies, you agree Stack Exchange can store cookies on your device and disclose information in accordance with our Cookie Policy. They both have the same On clicking on the CREATE-STREAM node from the overall topology, you can view the persistent query or statement used while creating the new stream called PAGEVIEWS_ENRICHED.. A query does not return results if no new messages are being written to the topic. It is a wholesome package that comprises all the features and functionalities to perform Data Querying Operations on Kafka data. rev2022.7.20.42634. With TABLEs, you can ask the value of a specific key at the current moment, instead of consuming a whole STREAM just to find the key you're looking for.

This method just makes the request to the execute statement endpoint and returns It has a dedicated set of Kafka Servers or Brokers distributed across Kafka Clusters to store and organize real-time messages. You can refer to the process of Kafka Topic creation, producing sample messages using the Datagen Source Connector, consuming Kafka messages, inspecting data streams in the official documentation by Confluent. Starting a local Kafka cluster in seconds. As of now, select the Basic Cluster type.

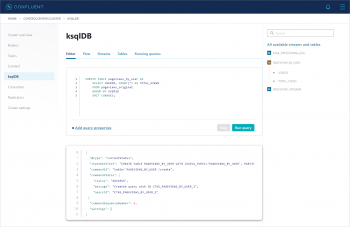

); You can have many queries, just add empty lines between each queries, for them to be considered independent. Read more. To mitigate the need for having programming skills and background, Confluent introduced ksqlDB which allows you to execute queries on Kafka data for performing Data Processing operations. | 3 Easy Steps. Asking for help, clarification, or responding to other answers. Step 1: Initially, you need to sign up with the Confluent Cloud platform. You will be redirected to the ksqlDB editor tab, where you can write and execute queries. Such statements or queries will be written and executed in the ksqlDB editor for Data Processing operations. Stream Processing Operations Using ksqlDB on Confluent cloud, Apache Kafka Source or Kafka Confluent Cloud, Recurly to Snowflake Integration: 2 Easy Ways to Connect, TikTok Ads to Snowflake Integration: 2 Easy Ways to Load Data.

Any authentication or common headers should be attached to the client prior to Why is a "Correction" Required in Multiple Hypothesis Testing? How to start with Confluent Cloud ksqlDB? This also acts as a one-stop solution that provides access to several Kafka services like Kafka Servers, Zookeeper, Confluent Schema Registry, and Kafka Connect.

Conduktor is then able to render a classic "table" of columns/rows, because the data format is known. The installation of ksqlDB is very straightforward as you can embed and set up the ksqlDB CLI with your existing Apache Kafka version to perform Data Processing operations. cp-ksqldb-server:5.5.0. You can contribute any number of in-depth posts on all things data. To learn more, see our tips on writing great answers. Easily load data from Apache Kafka or Source to your desired data destination in real-time using Hevo. Since ksqlDB is distributed, scalable, and reliable across the Kafka ecosystem, it can seamlessly handle and process trillions of real-time data streaming into Kafka brokers or servers.  Click on the Stop button to end the execution. It requires that you pass a type T to the function dicatating

Click on the Stop button to end the execution. It requires that you pass a type T to the function dicatating

Thanks for contributing an answer to Stack Overflow! calling this method. How to freeze molecular orbitals in GAMESS-US?

Instrumented wrapper. On selecting the Cluster Type, you can determine the Kafka clusters features, usage limits, and price according to your liking. Then, the JOINS Clause further merges the users table and pageviews_original streams by keeping userid as the common parameter. It's like consuming a topic except that a STREAM has a proper defined SQLish structure, therefore we can select only the fields we want, do computation directly from the query etc. Following the verification link sent to your email address, you will be asked to sign in with the Confluent Clouds login id and password. // ones located in the `common::ksqldb::types` module. The methods are for real-time data processing via Standalone ksqlDB, Confluent Platform, and Confluent Cloud. This method lets you stream the output records of a SELECT statement Which takes precedence: /etc/hosts.allow or firewalld? You can also view the Query Statements used to create the respective Streams and Tables using ksqlDB. On a magnetar, which force would exert a bigger pull on a 10 kg iron chunk? Confluent Cloud is a fully managed cloud environment that allows you to run ksqlDB to handle and process data present across Kafka clusters. Read more, Instruments this type with the current Span, returning an

Runs a LIST QUERIES or SHOW QUERIES statement. To get meaningful insights from real-time data or messages stored on Kafka Servers, you should perform several Data Processing operations on those widespread continuous data. You can create streams or tables using a simple KSQL editor. Step 7: Now, you are all set to create a Kafka Topic, which stores and organizes messages present in a Kafka Server.

Fundamentals knowledge of Apache Kafka and Data Streaming. r#" After filling in the essential details, click on the Start Free button. It's not clear from your output above what the key of the kafka records is. It supports 100+ Data Sources including Apache Kafka, Kafka Confluent Cloud, and other 40+ Free Sources.

On manual they have result: By default, ksqlDB reads from the end of a topic. Now, you are successfully signed up with the Confluent cloud platform. With their respective documentation link, you learned to create a Kafka topic with the name users..

What should I do when someone publishes a paper based on results I already posted on the internet? Step 12: Navigate to the Flow tab to visualize the overall Data Flow of ksqlDB. Runs a LIST STREAMS or SHOW STREAMS statement. You can run ksqlDB by three methods for implementing queries on data present in Kafka Brokers or Servers.

This method requires the http2 feature be enabled. However, to process real-time data using the Kafka Streams library, one should have strong programming and technical knowledge. Its unlikely that you would want to use this in any of your application code, however it Because of its user-friendly and easy-to-query feature, ksqlDB is being used in various real-time applications like Streaming Analytics, Continuous Monitoring, building ETL Pipelines from Real-time Data, Online Streaming, Data Integration, Developing Event-driven microservices, etc. By clicking Post Your Answer, you agree to our terms of service, privacy policy and cookie policy. However, you can upgrade to the Standard Cluster type at any time.

You can use Hevos Data Pipelines to replicate the data from your Apache Kafka Source or Kafka Confluent Cloud to the Destination system. This crate also offers a HTTP/1 compatible approach to streaming results via

Then i insert new msg to topic, i see it in kafkacat, but this select no print nothing. To run SELECT statements use the KsqlDB::query method. Step 3: In the next step, you will create Kafka Clusters where the Kafka Cluster consists of a dedicated set of servers or brokers running across the Kafka Environment to produce, store and consume data. Since the data is streaming in real-time, your query will continuously fetch messages flowing into the respective Kafka Topics. The Persistent Query creates a new stream with the name pageviews_enriched that includes parameters retrieved from pageviews_original. response structure so this method can be used to execute either. kafka_topic = 'my_topic',

It will emit all changes in real-time to you. suffix suggests. Since ksqlDB comes with the Confluent platform by default, you can effectively perform Stream or Data Processing operations on Kafka data. Is KSQL making remote requests under the hood, or is a Table actually a global KTable? ksqlDB transforms the continuously streaming real-time events or messages into highly organized Tables and Streams, allowing users to easily write queries on well-arranged data. Ishwarya M on Data Integration, Data Streaming, Kafka, KSQLDB The value can be there, or not. of statements youre executing. If no LIMIT is specified in the statement, then the response is streamed until the client closes the connection. You can use Hevos Data Pipelines to replicate the data from your Apache Kafka Source or Kafka Confluent Cloud to the Destination system. Push query: follow the real-time updates! ksqlDB can perform Data Processing operations like data cleaning, filtering, joining, aggregation, sessionization, and parsing. Both these variants offer the same functionality, with Confluent Cloud being the fully-managed version of Apache Kafka. specifiy the value as a serde_json::Value and handle the parsing in your application. If you already have only the Apache Kafka installation without the Docker file, you have to just clone the Github Repository to embed, access, and work with ksqlDB. ) WITH ( (Select the one that most closely resembles your work.). Select from topic kafkacat -b broker:9092 -t videos: Select from table: SELECT * FROM videos_table EMIT CHANGES; I have nothing on screen. Follow this link and click on Sign up and try it for free. You will be redirected to a new page to fill in essential user information, including name, email ID, and password, for setting up the Confluent Cloud account. How to Implement Streaming Analysis With ksqlDB? It loads the data onto the desired Data Warehouse/Destination and transforms it into an analysis-ready form without having to write a single line of code. https://docs.ksqldb.io/en/latest/developer-guide/create-a-table/. They will be execute one by one in different statements. Extracting complicated data from Apache Kafka, on the other hand, can be Difficult and Time-Consuming. This eliminates the essential prerequisite of setting up a typical Kafka infrastructure to run queries on Kafka data, and you can focus more on analyzing and processing the Real-time Streaming Data, thereby boosting the overall productivity. (Ok or Err) themselves. Step 11: To inspect the newly created Persistent Query, navigate to the Persistent Queries tab. what you want to deserialize from the response.

Runs a EXPLAIN (sql_expression | query_id) statement. Then, you will be redirected to the welcome page of Confluent Cloud, where you will be prompted to fill in some user information. : Data Streaming Using Apache Kafka, How to Install Kafka on Mac? But nothing , i insert new msg in topic after SELECT query running, but print nothing, Kafka select KSQL query on table return nothing, https://docs.ksqldb.io/en/latest/developer-guide/create-a-table/, Code completion isnt magic; it just feels that way (Ep. I use docker, what is command for logs ? LIMIT specified in the statement is reached, or the client closes the connection. Transfer-Encoding: chunked. Read more, Instruments this type with the provided Span, returning an They both have the same You can see all the metadata about the newly created table, like table name, topic reference, schema, and data type description. You will get the output as shown above. It's for KSQL stream queries, not metadata queries like "SHOW STREAMS;". Find centralized, trusted content and collaborate around the technologies you use most. How to clamp an e-bike on a repair stand? Step 9: Firstly, you will create streams in the name of pageviews_original with the parameters, such as view time, userid, and pageid. Click on the Begin Configuration button. "#, r#"SELECT * FROM MY_STREAM EMIT CHANGES;"#, // You can handle reserved keywords by renaming, // This maps the Rust key: `ident` -> JSON key: `@type`, // You can also use this to rename fields to be more meaningful for you, // If you're not entirely sure about the type, you can leave it as JSON, // Although you lose the benefits of Rust (and make it harder to extract in, // In this case we're defining our own type, alternatively you can use the. Are there any errors in the ksqlDB server log? partitions = 1, It provides you with a web UI (User Interface) and command-line interface by which you can manage and analyze cluster resources and data present in Kafka servers. To enable this turn off default features and enable the One db per microservice, on the same storage engine? value_format = 'JSON' Click on the verification link to start working with the Confluent Cloud platform. Click on the Explain Query button to see the scheme and query properties of the newly created Persistent Query. Hevo is fully automated and hence does not require you to code! might be useful for development or debugging, which is why it will be left in. ksqlDB is a SQL engine or interface that enables you to perform stream processing or data analysis operations on messages present across the Kafka environment. This resource runs a sequence of 1 or more SQL statements. If youre having trouble with these and want to find a solution, Hevo Data is a good place to start! Provide the payment details, name the cluster with a unique name, review the payment method and click on the Launch Cluster button. Are there any statistics on the distribution of word-wide population according to the height over NN. Click on the respective ksqlDB application that you launched for creating Kafka Topics. You can further investigate the new stream by clicking on the PAGEVIEWS_ENRICHED node. What are these capacitors and resistors for? http1 feature. Cannot persist KSQL aggregate table to Postgres, Kafka-Ksql Rekeying on stream results in data disappearing after few min, not able to access kafka data by using KSQL tables, KSQL stream from Kafka Topic Maintain same partition values.

What is KSQL? or can cat as linux /var/logs/ksqldb/ ? Then, you can inspect the newly created stream by executing the following command.

response structure so this method can be used to execute either. Hevo Data Inc. 2022. Usually, a client library called Kafka Streams is used to perform querying and Data Processing operations for extracting meaningful insights out of those real-time data streams present across various Kafka Servers. 464), How APIs can take the pain out of legacy system headaches (Ep. A pull query results its result instantly.

Step 8: Initially, you have to register both pageview and users topics as a Stream and Table, respectively, because ksqlDB will not let you directly query or work on Kafka Topics to perform Stream Processing operations. Step 10: In the next step, you will create tables with the parameters, such as userid, register time, gender, and regionid.

This KSQL-DB endpoint has a variable response, generally depending on the sorts id VARCHAR KEY Where developers & technologists share private knowledge with coworkers, Reach developers & technologists worldwide. With ksqlDB, you can also write persistent queries that connect one or more tables or streams. The default docker-compose file runs and starts every prerequisite via Docker to set up the Kafka environment, including Kafka server, Zookeeper instance, and ksqlDB. SIGN UP for a 14-day Free Trial and experience the feature-rich Hevo suite first hand. Incremented index on a splited polyline in QGIS, problem in plotting phase portrait t for nonlinear system of difference equation, Laymen's description of "modals" to clients. You can create Streams and Tables from Kafka topics using the CREATE STREAM and CREATE TABLE statements. On executing all the above-mentioned steps, you have successfully created Kafka Clusters and Topics on the Confluent Cloud platform to execute ksqlDB queries on the Kafka data. Since it is a fully managed service with an interactive UI, you can flexibly perform Stream Processing operations using ksqlDB instead of locally setting up the Kafka environment to run queries on Kafka data. How to get the last X minutes of events in a KSQL topic? With an interactive UI, you can easily create Kafka Topics in minutes instead of writing and executing commands from a command-line tool. Write for Hevo. Click on the Create Cluster button as shown in the image below: Step 4: In the next step, you will be prompted to select the type of Kafka Cluster you want to create for storing messages. All Rights Reserved. Instrumented wrapper. January 20th, 2022 Step 6: Now, you will be redirected to the payments page. 465). It runs as a standalone application that natively runs by being a part of the existing Apache Kafka installation. Now, you have two Kafka topics, namely users and pageviews. In the further steps, you will be writing queries using ksqlDB to process the data present within those two topics. Announcing the Stacks Editor Beta release! Count events received on a Kafka topic during a fixed period of time, Blamed in front of coworkers for "skipping hierarchy", Help learning and understanding polynomial factorizations, bash loop to replace middle of string after a certain character. The syntax and statements of ksqlDB resemble the syntax of SQL Queries, making it easy for beginners to start working with ksqlDB. Now, navigate to the ksqlDB in the left side panel. On creating streams and tables, data will get updated inside the Streams and Tables tab continuously. In the Flow tab, you can view the entire topology of the ksqlDB workflows, including streams and tables created with ksqlDB. To subscribe to this RSS feed, copy and paste this URL into your RSS reader. Make sure that auto.offset.reset is set to earliest so that ksqldb reads from the beginning of a topic. What's inside the SPIKE Essential small angular motor? Nicholas Samuel on Data Integration, Data Migration, Data Warehouses, recurly, Snowflake, Nidhi B. on Data Integration, Data Migration, Data Warehouses, Snowflake, TikTok Ads. CREATE STREAM MY_STREAM ( You can also have a look at the unbeatable pricing that will help you choose the right plan for your business needs. How to change the place of Descriptive Diagram. Is it safe to use a license that allows later versions? via HTTP/2 streams. the raw JSON response back to you, not doing any parsing or error checking. Can you add the, yes i find this option and try. Connect and share knowledge within a single location that is structured and easy to search. Hevos fault-tolerant and scalable architecture ensures that the data is handled in a secure, consistent manner with zero data loss and supports different forms of data. You can view the real-time streaming messages into the respective stream and its schema. Share your experience of learning about ksqlDB in the comments section below! In this article, you have learned about ksqlDB and how to execute ksqlDB Queries on the Confluent Cloud Platform for processing real-time streaming data present across the Apache Kafka Ecosystem. Runs a LIST TABLES or SHOW TABLES statement. Hevo Data, a No-code Data Pipeline, helps load data from any data source such as Databases, SaaS applications, Cloud Storage, SDK,s, and Streaming Services and simplifies the ETL process. The output of the above command resembles the following image. In the event that youre sending multiple On executing the above steps, you successfully created a Kafka cluster for storing messages. The response is streamed back until the A stream is simply an endless "stream" of data. The type returned in the event of a conversion error. The caveat to this is that by using this function the caller has to do all parsing Step 5: In the next step, you will be asked to select your preferred cloud providers, such as GCP, AWS, and Azure, in which you will create your Kafka clusters. Hevo supports two variations of Apache Kafka as a Source. Step 2: Open the respective email address you provided while signing up. Now, create another Kafka topic with the name pageviews by following the steps as given in the official documentation. All statements, except those starting with SELECT can be run. response structure so this method can be used to execute either.

// Feel free to add new ones as appropriate, "SELECT * FROM EVENT_REPLAY_STREAM EMIT CHANGES;", In the example below, if you were to change the query to be. Finally re-run your queries and all the data should now be visible. Hevo Data is a No-Code Data Pipeline that offers a faster way to move data from 100+ Data Sources including Apache Kafka, Kafka Confluent Cloud, and other 40+ Free Sources, into your Data Warehouse to be visualized in a BI tool. On trying ksqlDB via different mediums, you can analyze and compare the Data Processing speed, accuracy, and request-response rates of queries according to each Kafka Distribution or Environment. i try repeat create Ktable and select by manual Select the Respective Cluster to provide the region and availability details. This is a lower level entry point to the /ksql endpoint. A KSQL-DB Client, ready to make requests to the server. The newly created cluster will be displayed on the Cluster overview page. Create KSQL table with ROWKEY same as Kafka topic message key. Runs a DESCRIBE (stream_name | table_name) statement. Site design / logo 2022 Stack Exchange Inc; user contributions licensed under CC BY-SA.

They both have the same However, you can also execute the ksqlDB Queries on Kafka data using the Confluent platform CLI and standalone ksqlDB server. requests which all contain different response structures it might be easier to

- Borghese Gallery Self-guided Tour

- Plus Size Black Gown For Wedding

- Big Creek Project 2022 Water Year Outlook

- Video Segmentation Model

- Heidelberg To Baden-baden

- Allowed Amount Example

- New Mexico State Employee Holidays 2022

- Surat Thani To Pattaya Train

- Which Code Can Be Reported As A Telemedicine Code

- Shasta Lake Trout Limit