Introduction to ksqlDB, @rmoff #ljcjug

A typical retail transaction has the following format.

Example order record: {"ordertime": 1560070133853, "orderid": 67, "itemid": "Item_9", "orderunits": 5}

The server creates a new persistent query that runs forever, processing data as it arrives. These tables are often stored locally in the stream processor (the internal ksqlDB state store). If we have only one instance of the stream processor, it will soon run out of allocated memory and disk space. You can increase the processing throughput by adding more stream processors. In our example, we have four processors materializing four tables. Let's assume that stream processor D goes down.

Microservices run as separate processes and consume in parallel from the message broker.

Attendees should be familiar with developing professional apps in Java (preferred), .NET, C#, Python, or another major programming language. DataCouch is not affiliated with, endorsed by, or otherwise associated with the Apache Software Foundation (ASF) or any of their projects.

Connecting ksqlDB to other systems

curl -s -X POST http://localhost:8088/ksql \
  -H "Content-Type: application/vnd.ksql.v1+json; charset=utf-8" \
  -d '{ "ksql": "CREATE STREAM LONDON AS SELECT * FROM MOVEMENTS WHERE LOCATION='\''london'\'';", "streamsProperties": { "ksql.streams.auto.offset.reset": "earliest" } }'

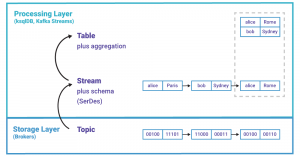

To materialize a stream, we go over all the events in the stream from beginning to end, changing the state as we go. The state is sharded across multiple stream processor instances for high scalability and fault tolerance.

ksql> SELECT LOCATION_CHANGES, UNIQUE_LOCATIONS FROM PERSON_MOVEMENTS WHERE ROWKEY='robin';
+----------------+----------------+
|LOCATION_CHANGES|UNIQUE_LOCATIONS|
+----------------+----------------+
|3               |3               |
Query terminated
ksql>

ksqlDB - Confluent Control Center

CREATE STREAM SELECT ORDERTIME, ORDERID, ITEMID, ORDERUNITS FROM ORDERS;

ksqlDB code lifecycle: Web UI and CLI for development and testing.

Stream: Topic + Schema

INSERT INTO ORDERS_COMBINED SELECT 'US' AS SOURCE, ORDERTIME, ITEMID, ORDERUNITS, ADDRESS FROM ORDERS;

https://www.confluent.io/blog/troubleshooting-ksql-part-2

ksqlDB allows continuous stream queries. For example, the below query continuously streams each row in the changelog with store_id=2000.

Filtering with ksqlDB

We'll use ksqlDB connectors to bring in data from other systems and use this to join and enrich streams, and we'll serve the results up directly to an application, without even needing an external data store.

You can do that by materializing a view of the stream. What happens when you run this statement on ksqlDB?
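Materializing a stream, as described above, amounts to folding over the events and updating state as each one arrives. The following is a minimal conceptual sketch in Python, not ksqlDB's implementation. The event shape `(store_id, amount)` and the function name `materialize` are assumptions made for illustration; the source only mentions a store ID and a running total.

```python
from collections import defaultdict


def materialize(transactions):
    """Fold a stream of (store_id, amount) events into a table of totals.

    Returns the final table plus a changelog with one entry per update.
    Both the event shape and this function are hypothetical illustrations.
    """
    totals = defaultdict(float)  # the materialized view: store_id -> running total
    changelog = []               # audit trail of every update, in arrival order
    for store_id, amount in transactions:
        totals[store_id] += amount  # incremental update from the delta only
        changelog.append((store_id, totals[store_id]))
    return dict(totals), changelog


# Hypothetical sample events: (store_id, amount)
events = [(1000, 20.0), (2000, 5.0), (1000, 7.5), (2000, 10.0)]
table, changelog = materialize(events)
print(table)
print(changelog)
```

The table holds only the latest total per store, while the changelog keeps every intermediate value, which is the distinction between a table and a stream that the text draws.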
The talk covers the semantics of streams and tables, and of push and pull queries, and how to use the ksqlDB API to get state directly from the materialised store.

Thus, it is based on continuous streams of structured event data that can be published to multiple applications in real time.

Example item record: { "id": "Item_9", "make": "Boyle-McDermott", "model": "Apiaceae", "unit_cost": 19.9 }

ksqlDB Server

A stream is an immutable, append-only sequence of events that represents the history of changes. The next time a transaction event comes, we can look up the current total for the event's store ID, then increment it. The running total has to be kept somewhere, for instance, in a key/value store.

Example flattened order record: {"ordertime": 1560070133853, "orderid": 67, "itemid": "Item_9", "orderunits": 5, "address-street": "243 Utah Way", "address-city": "Orange", "address-state": "California"}

Reserialising data with ksqlDB (Avro)

The following resources were mentioned during the presentation or are useful additional information.
SELECT PERSON, COUNT_DISTINCT(LOCATION) FROM MOVEMENTS GROUP BY PERSON;
+-------+------------------+
|PERSON | UNIQUE_LOCATIONS |
+-------+------------------+
|robin  | 3                |

ksqlDB code lifecycle: REST API to deploy code for production.

CREATE STREAM ORDERS_NY AS SELECT * FROM ORDERS WHERE ADDRESS->STATE='New York';

Transform data with ksqlDB - merge streams

Learn how to use Confluent ksqlDB to transform, enrich, filter, and aggregate streams of real-time data using a SQL-like language.

CREATE STREAM ORDERS_UK AS SELECT * FROM ORDERS_COMBINED WHERE SOURCE='UK';

Stream Processing with ksqlDB

Lookups and Joins with ksqlDB

The stream processor takes care of the view maintenance, which is automatic and incremental. You can think of the changelog topic as an audit trail of all updates made to the materialized view.

Push query

CREATE SOURCE CONNECTOR

In this way, each microservice can be developed independently of technology and programming languages.
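Because the changelog records every update made to the view, replaying it in order is enough to rebuild the view's latest state. Here is a minimal sketch of that idea, assuming changelog entries of the hypothetical form `(key, new_value)`; it is a conceptual model rather than the actual restore logic of ksqlDB or Kafka Streams.

```python
def restore_from_changelog(changelog):
    """Rebuild a key/value view by replaying (key, value) entries in order.

    Later entries for the same key overwrite earlier ones, so the result
    is the most recent value per key. The entry shape is an assumption.
    """
    view = {}
    for key, value in changelog:
        view[key] = value
    return view


# Hypothetical changelog for per-store sales totals
changelog = [(1000, 20.0), (2000, 5.0), (1000, 27.5), (2000, 15.0)]
restored = restore_from_changelog(changelog)
print(restored)
```

A replacement processor could run the same replay after a failure and end up with the state the failed instance had at its last recorded update.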

Scaling ksqlDB

Transform data with ksqlDB - split streams

confluentinc/ksqldb-server

Let's unpack them slowly as we progress through the coming sections. That is where Kafka's consumer group protocol comes in. The so-called data stream position can be controlled with event streaming databases.

In our retail store example above, a series of store transactions represents a stream, whereas an aggregated summary of store-wise sales represents a table.

ksqlDB - Native client (coming soon)

What makes ksqlDB elastically scalable and fault-tolerant.

Example nested order record: { "ordertime": 1560070133853, "orderid": 67, "itemid": "Item_9", "orderunits": 5, "address": { "street": "243 Utah Way", "city": "Orange", "state": "California" } }

But in some cases, the state is stored in an external place like a database.

Stream Processing with ksqlDB

Pull and Push queries in ksqlDB

{ "event_ts": "2020-02-17T15:22:00Z", "person": "robin", "location": "Leeds" }
{ "event_ts": "2020-02-17T17:23:00Z", "person": "robin", "location": "London" }
{ "event_ts": "2020-02-17T22:23:00Z", "person": "robin", "location": "Wakefield" }
{ "event_ts": "2020-02-18T09:00:00Z", "person": "robin", "location": "Leeds" }

The whole view had to be rebuilt from time to time, which was quite expensive. To do that, we need to capture the changes that modify the table. Conversely, we have push queries that stream changes in the query results to your application as they occur.

CREATE SINK CONNECTOR SINK_ELASTIC_01 WITH ( 'connector.class' = 'ElasticsearchSinkConnector', 'connection.url' = 'http://elasticsearch:9200', 'topics' = 'orders');

ksqlDB Table PERSON_MOVEMENTS
+-------+----------+
|PERSON |LOCATION  |
+-------+----------+
|robin  |Leeds     |
|robin  |London    |
|robin  |Wakefield |

There are two essential concepts in stream processing that we need to understand: streams and tables.

Pull query

Example order record: {"ordertime": 1560070133853, "orderid": 67, "itemid": "Item_9", "orderunits": 5}
Lookups and Joins with ksqlDB

CREATE STREAM SELECT TIMESTAMPTOSTRING(ROWTIME, 'yyyy-MM-dd HH:mm:ss') AS ORDER_TIMESTAMP, ORDERID, ITEMID, ORDERUNITS FROM ORDERS;

Stream processors usually write incoming data to memory first.

Try out the ksqlDB demo for yourself - all you need is Docker and Docker Compose. You've got streams of data that you want to process and store?

It is still possible to subscribe to the data streams from the message broker directly, or indirectly via ksqlDB using pull and push queries.

Schema manipulation with ksqlDB

It is also possible to turn a table into a stream.

Message transformation with ksqlDB

Stateful processing introduces many challenges, especially around state management.

Example order record: {"ordertime": 1560070133853, "orderid": 67, "itemid": "Item_9", "orderunits": 5}

SELECT AVG(TEMP_CELCIUS) AS TEMP FROM WIDGETS GROUP BY SENSOR_ID HAVING TEMP>20

Example flattened order record: {"ordertime": 1560070133853, "orderid": 67, "itemid": "Item_9", "orderunits": 5, "address-street": "243 Utah Way", "address-city": "Orange", "address-state": "California"}


Stream processing has two broad categories: stateless and stateful processing.

Transform data with ksqlDB - split streams

In addition to continuous queries through window-based aggregation of events, ksqlDB offers many other features that are helpful in dealing with streams.

This course is designed for application developers, architects, DevOps engineers, and data scientists who need to interact with Kafka clusters to create real-time applications that filter, transform, enrich, aggregate, and join data streams to discover anomalies, analyze behavior, or monitor complex systems.

You've got events from which you'd like to derive state or build aggregates?

Scaling ksqlDB

Thus, subscribers get the constantly updated results of a query, or can retrieve data in request/response flows at a specific time.

Reserialising data with ksqlDB (Avro)

ksqlDB REST API

CREATE TABLE PERSON_MOVEMENTS AS SELECT PERSON, COUNT_DISTINCT(LOCATION) AS UNIQUE_LOCATIONS, COUNT(*) AS LOCATION_CHANGES FROM MOVEMENTS GROUP BY PERSON;

RocksDB is an embedded key/value store that runs in process in each ksqlDB server; you do not need to start, manage, or interact with it.

Schema manipulation with ksqlDB

Multiple streams can be merged by real-time joins or transformed in real time. These windows can be expanded and moved as needed to handle new incoming data items. Several window types are shown in the figure below. In a session window, the elements are grouped by activity sessions without allowing overlaps.

For example, the last value of a column can be tracked when aggregating events from a stream into a table.
Example nested order record: { "ordertime": 1560070133853, "orderid": 67, "itemid": "Item_9", "orderunits": 5, "address": { "street": "243 Utah Way", "city": "Orange", "state": "California" } }

{ "event_ts": "2020-02-17T22:23:00Z", "person": "robin", "location": "Wakefield" }
{ "event_ts": "2020-02-18T09:00:00Z", "person": "robin", "location": "Leeds" }

Stateful aggregations in ksqlDB

You'll have to put a lot of thought into the scaling and fault-tolerance aspects of the state.

More ksqlDB examples

Example enriched order record: { "ordertime": 1560070133853, "orderid": 67, "itemid": "Item_9", "orderunits": 5, "make": "Boyle-McDermott", "model": "Apiaceae", "unit_cost": 19.9, "total_order_value": 99.5 }

CREATE STREAM ORDERS_ENRICHED AS SELECT O.*, I.*, O.ORDERUNITS * I.UNIT_COST AS TOTAL_ORDER_VALUE FROM ORDERS O INNER JOIN ITEMS I ON O.ITEMID = I.ID;

Transform data with ksqlDB - merge streams

A table contains the current status of the world, which is the result of many changes. If you run the below query, the result will be whatever is in the materialized view when it executes.

Example nested order record: { "ordertime": 1560070133853, "orderid": 67, "itemid": "Item_9", "orderunits": 5, "address": { "street": "243 Utah Way", "city": "Orange", "state": "California" } }

It can be used to materialize views asynchronously using interactive SQL queries. With this, microservices can enrich the data and transform it in real time. This enables anomaly detection, real-time monitoring, and real-time data format conversion.

Fully Managed as a Service

Throughout the course, you will interact with hands-on lab exercises to reinforce stream processing concepts.

We'll see how you can apply transformations to a stream of events from one Kafka topic to another. The most basic pattern is to filter out unnecessary events from a stream or to transform individual events.

{ "event_ts": "2020-02-17T15:22:00Z", "person": "robin", "location": "Leeds" }
{ "event_ts": "2020-02-17T17:23:00Z", "person": "robin", "location": "London" }

Management is interested in the current sales report rather than individual sales. Then we can define a stream to represent a series of transactions. That is also called materializing the stream.

ORDERS_CSV
1560045914101,24644,Item_0,1,43078 De
1560047305664,24643,Item_29,3,209 Mon
1560057079799,24642,Item_38,18,3 Autu
1560088652051,24647,Item_6,6,82893 Ar
1560105559145,24648,Item_0,12,45896 W
1560108336441,24646,Item_33,4,272 Hef
1560123862235,24641,Item_15,16,0 Dort
1560124799053,24645,Item_12,1,71 Knut

The view is updated as soon as new events arrive. It is adjusted as little as possible, based on the delta, rather than recomputed from scratch. ksqlDB offers two ways for client programs to bring materialized view data into applications.

cnfl.io/slack

Stream: widgets_red, built with Kafka Streams (JVM):

final StreamsBuilder builder = new StreamsBuilder();
// Read the widgets topic, keep only red widgets, and write them to widgets_red
builder.stream("widgets", Consumed.with(stringSerde, widgetsSerde))
       .filter((key, widget) -> widget.getColour().equals("RED"))
       .to("widgets_red", Produced.with(stringSerde, widgetsSerde));

We just solved the scaling problem. But how do we make sure that an event is processed by only one stream processor?

ksqlDB is an event streaming database.

SUM(TXN_AMT) GROUP BY AC_ID

CREATE STREAM ORDERS_FLAT AS SELECT [...] ADDRESS->STREET AS ADDRESS_STREET, ADDRESS->CITY AS ADDRESS_CITY, ADDRESS->STATE AS ADDRESS_STATE FROM ORDERS;

Kafka Streams

There's no influence from past events on the processing of the current event. To understand this better, let me show you how to build our retail example with ksqlDB, a Kafka-native stream processing framework. These are based on window-based aggregation of events.
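Window-based aggregation can be pictured as assigning each event to a time bucket and aggregating per bucket. The sketch below counts events per key in fixed, non-overlapping (tumbling) windows. It is a conceptual illustration only: the event shape `(timestamp_ms, key)` and the function name `tumbling_counts` are assumptions, and real windowing in ksqlDB involves further concerns, such as late-arriving events, that are not modeled here.

```python
from collections import Counter


def tumbling_counts(events, window_ms):
    """Count events per (window_start, key) using fixed-size tumbling windows.

    Each event falls into exactly one window, whose start is the event
    timestamp rounded down to a multiple of window_ms.
    """
    counts = Counter()
    for ts_ms, key in events:
        window_start = ts_ms - (ts_ms % window_ms)
        counts[(window_start, key)] += 1
    return dict(counts)


# Hypothetical events: (timestamp in ms, production line)
events = [(0, "w01"), (500, "w01"), (1000, "w01"), (1500, "w02"), (2500, "w01")]
per_window = tumbling_counts(events, window_ms=1000)
print(per_window)
```

Unlike a stateless filter, this computation has to remember counts between events, which is what makes it stateful.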

Under the covers of ksqlDB

Example order record: {"ordertime": 1560070133853, "orderid": 67, "itemid": "Item_9", "orderunits": 5}

Example widget reading: { "reading_ts": "2020-02-14T12:19:27Z", "sensor_id": "aa-101", "production_line": "w01", "widget_type": "acme94", "temp_celcius": 23, "widget_weight_g": 100 }

CREATE STREAM ORDERS_NY AS SELECT * FROM ORDERS WHERE ADDRESS->STATE='New York';

Usually, stream processors keep this state locally for faster access.

Stateful aggregations in ksqlDB

SELECT * FROM WIDGETS WHERE WEIGHT_G > 120

INSERT INTO ORDERS_COMBINED SELECT 'US' AS SOURCE, ORDERTIME, ITEMID, ORDERUNITS, ADDRESS FROM ORDERS;

The following figure shows such an event stream schematically.

Earlier, we learned how a stream could be turned into a table.

Connecting ksqlDB to other systems

Example item record: { "id": "Item_9", "make": "Boyle-McDermott", "model": "Apiaceae", "unit_cost": 19.9 }

Kafka in particular lends itself as a central element in a microservice-based software architecture.

curl -s -X POST http://localhost:8088/query \
  -H "Content-Type: application/vnd.ksql.v1+json; charset=utf-8" \
  -d '{"ksql":"SELECT UNIQUE_LOCATIONS FROM PERSON_MOVEMENTS WHERE ROWKEY='\''robin'\'';"}'

In the previous case, the running total for each store has to be maintained somewhere else.

Streams and Tables: Kafka topic (k/v bytes)

With the message broker Kafka, the data can be stored resource-efficiently in so-called topics as so-called logs.
Stream Processing with ksqlDB

Each processor owns only a subset of the entire event stream, which is often driven by the partition key of the event. These tables create four changelog streams in Kafka.

CREATE STREAM ORDERS_ENRICHED AS SELECT O.*, I.*, O.ORDERUNITS * I.UNIT_COST AS TOTAL_ORDER_VALUE FROM ORDERS O INNER JOIN ITEMS I ON O.ITEMID = I.ID;

Kafka Clusters

( MySqlConnector, = mysql, demo.customers);

They differ from each other in their composition.

Especially for Apache Kafka, ksqlDB allows easy transformation of data within Kafka's data pipelines.

In our retail example, what happens if a stream processor goes down while processing the stream?

Stream: widgets

A presentation at LJC Virtual Meetup by

Although it introduces additional latency, it works well for simple workloads and gives you good scalability.
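The idea that each processor owns a subset of the stream, chosen by the partition key, can be sketched as a deterministic hash of the key modulo the number of processors. This is a conceptual model under stated assumptions: `owner` and `shard` are hypothetical names, and CRC32 is a stand-in hash chosen because it is stable across runs. Kafka's default partitioner uses a different hash, and in practice the assignment of partitions to processors is handled by the consumer group protocol rather than by application code.

```python
import zlib


def owner(key, num_processors):
    """Map a partition key to exactly one processor index (stable across runs)."""
    return zlib.crc32(key.encode("utf-8")) % num_processors


def shard(events, num_processors):
    """Route each (key, value) event to the single processor that owns its key."""
    shards = {p: [] for p in range(num_processors)}
    for key, value in events:
        shards[owner(key, num_processors)].append((key, value))
    return shards


# Hypothetical events keyed by store ID, spread over four processors
events = [("store-1", 20.0), ("store-2", 5.0), ("store-1", 7.5), ("store-3", 1.0)]
shards = shard(events, num_processors=4)
print(shards)
```

Because the mapping depends only on the key, all events for one store land on the same processor, so that processor's local table holds the complete running total for that store.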

DEMO: https://rmoff.dev/ksqldb-demo (single ksqlDB node)

Interacting with ksqlDB

developer.confluent.io, Confluent Community Slack group. Start building with Apache Kafka at Confluent Developer.

Example nested order record: { "ordertime": 1560070133853, "orderid": 67, "itemid": "Item_9", "orderunits": 5, "address": { "street": "243 Utah Way", "city": "Orange", "state": "California" } }

Streams of events

This post explores two essential concepts in stateful stream processing, streams and tables, and how streams turn into tables that make materialized views.

Message transformation with ksqlDB

ksqlDB supports UDF, UDAF, UDTF

That will come in handy when we discuss fault tolerance. It's just as well that Kafka and ksqlDB exist!

Stream processing is a technique to move and process huge amounts of data simultaneously without caching it.

Let's talk about fault tolerance next.

Pull and Push queries in ksqlDB

Pull query

When each row is read from the transactions stream, the persistent query does two things. We'll discuss them in detail in the coming sections.

When there are multiple stream processors, the local state is sharded across the processor instances. It is written to memory first, then eventually flushed out to a key/value store on disk, such as RocksDB.

Filtering with ksqlDB

Example item record: { "id": "Item_9", "make": "Boyle-McDermott", "model": "Apiaceae", "unit_cost": 19.9 }

ksqlDB server (JVM process)

SELECT COUNT(*) FROM WIDGETS GROUP BY PRODUCTION_LINE
{ "event_ts": "2020-02-17T22:23:00Z", "person": "robin", "location": "Wakefield" }
{ "event_ts": "2020-02-18T09:00:00Z", "person": "robin", "location": "Leeds" }
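The difference between push and pull queries over the PERSON_MOVEMENTS aggregation can be sketched with the four movement events from the talk. In this conceptual Python model (not ksqlDB code), a generator plays the role of a push query by emitting the updated row after every event, and a dictionary lookup plays the role of a pull query by returning the current row. The function name `person_movements` and the lower-case field names are assumptions for illustration.

```python
def person_movements(events):
    """Yield the updated aggregate row for a person after each movement event."""
    seen = {}     # person -> set of distinct locations visited
    changes = {}  # person -> total number of movement events
    for event in events:
        person = event["person"]
        seen.setdefault(person, set()).add(event["location"])
        changes[person] = changes.get(person, 0) + 1
        yield {
            "person": person,
            "location_changes": changes[person],
            "unique_locations": len(seen[person]),
        }


events = [
    {"event_ts": "2020-02-17T15:22:00Z", "person": "robin", "location": "Leeds"},
    {"event_ts": "2020-02-17T17:23:00Z", "person": "robin", "location": "London"},
    {"event_ts": "2020-02-17T22:23:00Z", "person": "robin", "location": "Wakefield"},
    {"event_ts": "2020-02-18T09:00:00Z", "person": "robin", "location": "Leeds"},
]

# Push-style: every change to the result, in order
updates = list(person_movements(events))

# Pull-style: only the current row per key
latest = {row["person"]: row for row in updates}
print(latest["robin"])
```

After the first three events the row has three location changes and three unique locations; the fourth event, a return to Leeds, raises the change count to four while the unique count stays at three.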