We then convert this array into a byte stream, parse it into the object we need (here, UserData), and return it. Kafka Consumer :- A Kafka consumer is the process or application that consumes, or reads, data from Kafka. Look more carefully at the example that you copied from. You want to inspect or debug records written to a topic.
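The byte-array-to-object step can be sketched as follows. This is only a minimal illustration and assumes the producer wrote the value with plain Java serialization; the fields of `UserData` and the helper name `UserDataCodec` are hypothetical. In a real consumer the `deserialize` body would sit inside a class that extends Kafka's `Deserializer[UserData]`.

```scala
import java.io.{ByteArrayInputStream, ByteArrayOutputStream, ObjectInputStream, ObjectOutputStream}

// Hypothetical payload type; the fields are assumptions for illustration only.
case class UserData(name: String, age: Int)

object UserDataCodec {
  // Producer side: turn a UserData into the bytes that would be stored in Kafka.
  def serialize(user: UserData): Array[Byte] = {
    val buffer = new ByteArrayOutputStream()
    val out = new ObjectOutputStream(buffer)
    try out.writeObject(user) finally out.close()
    buffer.toByteArray
  }

  // Consumer side: wrap the byte array in a stream and read the object back.
  def deserialize(bytes: Array[Byte]): UserData = {
    val in = new ObjectInputStream(new ByteArrayInputStream(bytes))
    try in.readObject().asInstanceOf[UserData] finally in.close()
  }
}
```

Java serialization is used here only because it matches the "stream of bytes to object" description; a format such as JSON or Avro could be swapped in, provided producer and consumer agree on it.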

It throws an exception. Now let's update your console consumer command to specify the deserializers for keys and values.


For example, we can store registered-user data on one topic and unregistered-user data on a different topic. You are dealing with byte arrays for the key and value parameters. Here we extend Deserializer with UserData as the return type. Create another scala file in.

After that we need to specify the properties for the Kafka Consumer.
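A minimal sketch of such a properties object is shown here. It uses only `java.util.Properties`, so it runs without the Kafka client on the classpath. The four keys are the standard consumer settings; the helper name `ConsumerSettings` and every value passed to it are assumptions to be replaced with your own.

```scala
import java.util.Properties

object ConsumerSettings {
  // Builds the configuration a Kafka consumer expects.
  // Deserializers are given as fully qualified class-name strings.
  def build(bootstrapServers: String,
            groupId: String,
            valueDeserializerClass: String): Properties = {
    val props = new Properties()
    props.setProperty("bootstrap.servers", bootstrapServers)
    props.setProperty("group.id", groupId)
    // Built-in String deserializer for the record key.
    props.setProperty("key.deserializer",
      "org.apache.kafka.common.serialization.StringDeserializer")
    // Custom deserializer for the record value (hypothetical class name supplied by the caller).
    props.setProperty("value.deserializer", valueDeserializerClass)
    props
  }
}
```

For example, `ConsumerSettings.build("localhost:9092", "user-feed-readers", "com.example.UserDataDeserializer")` would produce a configuration that can be handed to the consumer's constructor; all three arguments here are placeholders.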

Here we get an Array[Byte], which is the data received from Kafka. Hello everyone, today we will talk about the Kafka consumer.


It would be more helpful if you could point out the place. Kafka brokers :- Data storage and replication in Kafka are managed by a set of servers, called Kafka brokers, which together form the Kafka cluster. We just print the name of the user we received. Then we iterate through the data received from Kafka and access the value of each record. Each record key and value is a long and a double, respectively.

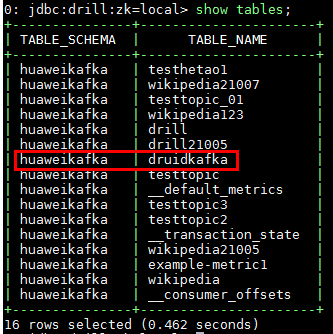

The records have the format key = Long and value = Double. Most of the code is copied from the comments in org.apache.kafka.clients.consumer.KafkaConsumer.java. If you forget the .getName() call, you will get the same exception, which in that case is misleading. Next, from the Confluent Cloud Console, click on Clients to get the cluster-specific configurations, e.g. I have a simple class to consume messages from a Kafka server. Site design / logo 2022 Stack Exchange Inc; user contributions licensed under CC BY-SA.
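To see why Long keys and Double values need their own deserializers, here is a small sketch that round-trips both through byte arrays. It assumes an 8-byte big-endian layout, which to my knowledge is what Kafka's built-in Long and Double serializers use; the object name `PrimitiveBytes` is hypothetical.

```scala
import java.nio.ByteBuffer

object PrimitiveBytes {
  // Encode a Long as 8 bytes; ByteBuffer defaults to big-endian order.
  def longToBytes(value: Long): Array[Byte] =
    ByteBuffer.allocate(8).putLong(value).array()

  // Encode a Double as its 8-byte IEEE 754 representation.
  def doubleToBytes(value: Double): Array[Byte] =
    ByteBuffer.allocate(8).putDouble(value).array()

  // Decode the 8 bytes back into the original primitives.
  def bytesToLong(bytes: Array[Byte]): Long =
    ByteBuffer.wrap(bytes).getLong()

  def bytesToDouble(bytes: Array[Byte]): Double =
    ByteBuffer.wrap(bytes).getDouble()

  // What a string-based reader would make of the same bytes.
  def asText(bytes: Array[Byte]): String =
    new String(bytes, "UTF-8")
}
```

Passing the bytes of a Long through `asText` yields mostly control characters, which is the kind of unreadable output a consumer shows when it treats these records as strings.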

Then we convert this to a Scala collection using .asScala.

We then subscribe the consumer to the Kafka topic that holds the data we require, here UserFeed. Where does this kafkaConsumer come from? Go back to your open windows and stop any console consumers with a Ctrl+C, then close the container shells with a Ctrl+D command. How do you specify key and value deserializers when running the Kafka console consumer? Note :- You need to implement the UserData object or class as per your requirement, or replace it with the object or class you require. Note :- A consumer can also act as a producer, since it can store data back into a Kafka topic after processing. This works out of the box without implementing your own serializers. To get started, make a new directory anywhere you'd like for this project: Next, create the following docker-compose.yml file to obtain Confluent Platform (for Kafka in the cloud, see Confluent Cloud). Here in this main function we have used an object named kafkaConsumer and called its start function. Here we specify the Kafka broker IPs, group ID, topic ID and the deserializers for the key and value stored in the Kafka topic. Multiple consumers can be in a single consumer group. So a byte serializer and deserializer are required. First create a Scala object and then write the following code. Copyright Confluent, Inc. 2014-2021.


Here we need to implement some abstract functions, one of which is the deserialize function. This is where our actual consumer will live. Of course, you'd have to specify the right encoders. Now you're all set to run your streaming application locally, backed by a Kafka cluster fully managed by Confluent Cloud. Kafka is a very popular streaming tool used by many large companies in the industry. Kafka has a very simple architecture built on a producer-consumer model. Don't worry, we will implement this next.

It's in there.

Currently, the console producer only writes strings into Kafka, but we want to work with non-string primitives and the console consumer. Then we commit our offset, which records how far into the topic the consumer group has read. The serializer class converts the message into a byte array, and the key.serializer class turns the key object into a byte array. In this tutorial, you'll learn how to specify key and value deserializers with the console consumer. Here we use a while loop to keep polling Kafka for data with the consumer's poll function. Topics are used to separate data according to application needs. Run this command in the container shell: After the consumer starts up, you'll get some output, but nothing readable is on the screen. Likewise, use one of the following for your value deserializer: Note that for Avro deserialisers, you will need the following dependencies: in your main method so that you pass them to the producer's constructor.


Next we access this deserialized object from the Kafka consumer as follows. Strings are the default, so you don't have to specify a deserializer for those.

Kafka Consumer Group :- Multiple consumers can read data from a single topic in a distributed manner by using a consumer group, which increases consumption speed. Now we need to implement a custom deserializer class to convert the byte array into the UserData object we require; this can be done in the same file as the consumer object. Here we have also added the dependencies which we will require further. Click on LEARN and follow the instructions to launch a Kafka cluster and to enable Schema Registry. After you log in to Confluent Cloud Console, click on Add cloud environment and name the environment learn-kafka. Here we have used the built-in String deserializer for the key, but for the value we are going to use a custom deserializer. Kafka was developed by LinkedIn as a solution to their problem of maintaining user data, such as user clicks or other events, as it is generated, and of processing these events on the fly. After you've run the docker-compose up -d command, wait 30 seconds to 1 minute before executing the next step. So in this tutorial, your docker-compose.yml file will also create a source connector embedded in ksqldb-server to populate a topic with keys of type long and values of type double. Simple Kafka Consumer Example not working. First we need to define an object named kafkaConsumer and then define a function in it named start. Then you can shut down the docker container by running: Instead of running a local Kafka cluster, you may use Confluent Cloud, a fully-managed Apache Kafka service. Kafka Producer :- Producers are the processes or applications that post data to Kafka.
Kafka cluster bootstrap servers and credentials, Confluent Cloud Schema Registry and credentials, etc., and set the appropriate parameters in your client application. This is just an object with a driver function, i.e. the main function. Close the consumer with a Ctrl+C command, but keep the container shell open. Now you'll use a topic created in the previous step.


You need to start a Gradle project in any IDE you are comfortable with; I am using IntelliJ.

Make sure you pass the string value of the deserialization class, rather than the class object (which was my mistake). org.apache.kafka.common.serialization.StringDeserializer, java.util.concurrent.atomic.AtomicInteger, org.springframework.context.annotation.Bean, org.apache.kafka.clients.producer.ProducerRecord, org.apache.kafka.clients.producer.KafkaProducer, org.apache.kafka.clients.consumer.ConsumerRecord, org.apache.kafka.clients.consumer.KafkaConsumer, org.apache.kafka.clients.consumer.ConsumerRecords, org.apache.kafka.clients.producer.ProducerConfig, org.apache.kafka.clients.consumer.ConsumerConfig, org.apache.kafka.common.serialization.StringSerializer, org.apache.kafka.clients.producer.Producer, org.apache.kafka.clients.producer.RecordMetadata. Let us understand the basic components of the Kafka architecture that we need today. compile 'org.scala-lang:scala-library:2.11.6'.
