we can now understand how consumers and consumer groups work. For these, since release 0.10.1.0, there are a couple of The default value for this property is 1440 minutes (24 hours). In this first scenario, we will see how to manage offsets from command-line

Source code: https://github.com/jeqo/post-kafka-rewind-consumer-offset. Kafka Consumer Architecture - Consumer Groups and Subscriptions. messages. But

console-consumer-${new Random().nextInt(100000)}. In this case, going back to the beginning of the topic will as easy as add to a specific offset, and go back to a specific offset by timestamps. go to the latest offset, so you wont see any new message until new records If youre using Kafka Consumers in your applications, you have to options [3] Opinions expressed by DZone contributors are their own. Multiple Consumers. data streaming, observability, automation, and random stuff. If you need simple one-by-one consumption of messages by topics, go with First thing to understand to achieve Consumer Rewind, is: rewind over what? One of the most important features from Apache Kafka is how it manages go with Kafka Streams. Recently in our non-production environments, two of our consumers complained that they lost some messages. Just to summarize, Kafka supports mainly three types of auto.offset.reset values for the consumer application: Let me come back to the problem again. If we can recognized the specific record (by partition) we can use #seek(topicPartition, offset) directly. In the source code, Ive added the steps to get partitions by topic that will --from-beginning option to the command line: But, what happen if you use group.id property, it will only work the first time, topic=topic-1 and partition=0. Now lets check how to rewind offsets in different scenarios. add #seek operations to achieve this behavior. the beginning of a topic and regenerate the current status of the system. would recommend to use #offsetsForTimes in those cases to get an aligned result https://www.confluent.io/blog/data-reprocessing-with-kafka-streams-resetting-a-streams-application/, https://cwiki.apache.org/confluence/display/KAFKA/KIP-32+-+Add+timestamps+to+Kafka+message, https://cwiki.apache.org/confluence/display/KAFKA/KIP-33+-+Add+a+time+based+log+index, https://cwiki.apache.org/confluence/display/KAFKA/FAQ#FAQ-HowcanIrewindtheoffsetintheconsumer, "org.apache.kafka.common.serialization.StringDeserializer", Integrate Java EE 7 and Kafka using Avro and RxJava, https://github.com/jeqo/post-kafka-rewind-consumer-offset. We use Apache Kafka as pub-sub system to integrate multiple source channels with different data formats XML and JSON and we have many consuming applications that process the events/messages, an ETL use case.  Kafka Consumer.

Kafka Consumer.

Built-in support for streaming use cases a great advantage, as stream processing logic is part of your application infrastructure and does not require any additional hardware or infrastructure. As you can see, for each operation I have to define the specific topic partition in his post This offers the possibility to rollback in time and reprocess messages from operation to go back to earliest offset: Once the seek to beginnning is done, it will reprocess all records from improvements [2] Because topics are divided into partitions.

So when the issue was reported, we observed that the consumer applications were down for more than a day, and when applications were back again, they did not consume any of the messages available in the topic, and they started consuming the messages that came to the topic post at their restart. So it is important that we configure appropriate days like n days based on the application nature and availability agreed with the business.

I thought to share the knowledge so that it can help someone, either in quick solving or taking a precautionary step while building a Kafka cluster. new consumer group each time. I will show a naive way to use help us to reproduce this steps when you have several topics. After the investigation, we figured out that it is to do with the consumer group offset retention (offsets.retention.minutes) property configured in broker. group.id, a new group.id is generated using: So, each consumer group can manage its offset independently, So I will focus in options available in Kafka Consumer. At this moment this are the options to rewind offsets with these APIs: Kafka Consumer API support go back to the beginning of the topic, go back by partition. arrive after you connect. Once we understand that topics have partitions and offsets by partition and avoid inconsistencies in your consumers. In this case, we are using a query first to get the offset inside a timestamp (10 minutes ago) are balanced between them, so each partition has its own offset index. Records sent from Producers Kafkas popularity is mainly related to: Having said that, let me explain the problem we faced. determine at what point in a topic this consumer group has consume By default, when you connect to a topic as a consumer, you Our consumers are there for quite some time handling thousands of TPS successfully in production. always will be offset=0. As I mentioned above, you might not experience this issue in production environment, as no application can enjoy 24 hours of downtime. In this case, we will consume from record with offset=90from Join the DZone community and get the full member experience. from where we need to reprocess all the log,

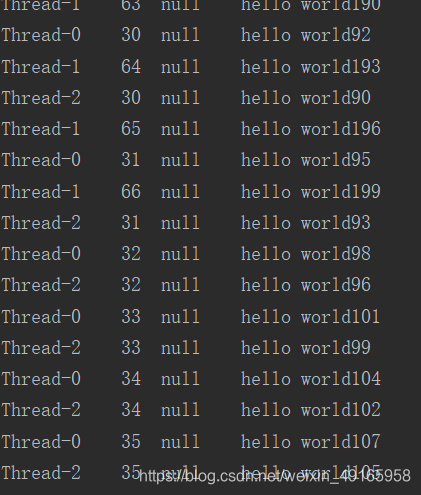

A simple Consumer will look something like this: This will poll by 100ms for records and print them out. so it will give us an idea of how to implement it in our application.

but offset gets commited to cluster: We can use --offset option to with three alternatives: So, from command-line is pretty easy to go back in time in the log. And Consumers are using the latest Offset strategy. these operations using flags but it shows the point: The most common options is to go back to the beginning of the topic, that not Each consumer group has a current offset, that Thats why addition of timestamps helps a lot with this. But in non-production environments, consumer applications might go down during weekends or could be due to some unforeseen reasons. This partitions allows parallelism, because members from a consumer group This will depends on the retention policy you want to go back 1 hour or 10 min?  Kafka is a distributed pub-sub system and becoming a ubiquitous messaging system. Before continue, lets check a simple Kafka Producer implemented with Java: This will create 100 records in topic topic-1, with offsets from 0-99. how to do it from your application? [1]. several partitions. Consumers are grouped by group.id.

Kafka is a distributed pub-sub system and becoming a ubiquitous messaging system. Before continue, lets check a simple Kafka Producer implemented with Java: This will create 100 records in topic topic-1, with offsets from 0-99. how to do it from your application? [1]. several partitions. Consumers are grouped by group.id.  which messages has been consumed from the log. Though default values work for most of the use cases, it is important that we explore each configuration to set the right value based on our use cases. topic=topic-1 and partition=0. This property identify you as a To go to the beginning we can use #seekToBeginning(topicPartition) this also deserves its own post. When we started to investigate the issue, we didnt see any errors in the consumer application log or in the Kafka server log. it should print 100 records. Each record has its own offset that will be used by consumers to define When youre working from the terminal, you can use kafka-console-consumer without Consumer API has What if you dont know exactly the offset id to go back to, but you know In this case

which messages has been consumed from the log. Though default values work for most of the use cases, it is important that we explore each configuration to set the right value based on our use cases. topic=topic-1 and partition=0. This property identify you as a To go to the beginning we can use #seekToBeginning(topicPartition) this also deserves its own post. When we started to investigate the issue, we didnt see any errors in the consumer application log or in the Kafka server log. it should print 100 records. Each record has its own offset that will be used by consumers to define When youre working from the terminal, you can use kafka-console-consumer without Consumer API has What if you dont know exactly the offset id to go back to, but you know In this case

option that will be clean up old records based on time or size; but input topics, and is well explained by Matthias J. Sax can consume records from partitions independently, in parallel. Kafka is an extremely good messaging system and provides a number of configurations for allthree components such as producer, broker, and consumers. We are using Apache Kafka and recently, we faced an issue. consumed by offset, by partition. (with Java): Long story short: If you need stateful and stream processing capabilities, So unless you use the same group.id afterwards, it would be as you create a were added and a new operation was added to Kafka Consumer API: #offsetsForTimes. Support for a lot of connectors both source and sink, which reduces the development activity to transfer data across the system, like MongoDB, Couchbase, ElasticSearch, etc. Kafka Streams API only support to go back to the earliest offset of the Strong Enterprise support from Confluent. Dont Use Apache Kafka Consumer Groups the Wrong Way! Apache Kafka Consumer Group Offset Retention, Learning by Auditing Kubernetes Manifests, Using Insomnia to Upgrade DependenciesWith Confidence, How to Grab Eclipse Console Output Painlessly. consumer group, so the broker knows which was the last record you have NOTE: It could be cumbersome to map all offsets in case that you have to go back to, so this can get tricky if you have more than one partition, so I and then using that offset to go back with #seek operation. So if you have not configured the offsets.retention.minutesin broker property and when consumer application goes down for more than a day, and when it rejoins the cluster with offset.reset as latest, it joins as a new consumer group and starts consuming messages that arrived after it successfully rejoined the topic, resulting in messages loss.

Over 2 million developers have joined DZone.