In the console producer, each line represents one record, and you send it by pressing the Enter key. All the properties available as Kafka producer properties can be set through spring.kafka.producer.properties.*, which holds additional producer-specific properties used to configure the client. We inject the default properties by using @Autowired to obtain the KafkaProperties bean. When recording a Kafka script in VuGen, all configuration properties defined by Apache Kafka can be used to configure producers and consumers.

As both of these components connect to the same broker, we can declare all the essential properties under the spring.kafka section, either by adding the Kafka configuration properties to the application.properties file of our Spring Boot application or by creating a configuration class with @Bean methods to override the required …

Step 4: With acks=0. With a value of 0, the producer won't even wait for a response from the broker.

Introduction to Kafka Console Producer (kafka-console-producer).

The Kafka producer has a set of configuration properties with default values, and a producer is instantiated by providing a set of key-value pairs as configuration. As the core configuration, you set the bootstrap.servers property so that the producer can find the Kafka cluster. The code begins as follows: public class HelloKafkaProducer { public static void main(String[] args) { // Create a core configuration: … The code can then be run on the command line from our bigdatums-kafka-1.0-SNAPSHOT.jar JAR file.

To bound how long a send can take, you can set an intuitive producer timeout (KIP-91, Kafka 2.1) such as delivery.timeout.ms=120000 (2 minutes).

When Kafka Producer evaluates a record, it calculates the expression based on record values and writes the record to … You can use a KafkaProducer node in a message flow to publish an output message from a message flow to a specified topic on a Kafka server.

Flink's Kafka producer, FlinkKafkaProducer, allows writing a stream of records to one or more Kafka topics. On the consuming side, we first need to add the appropriate deserializer, which can convert a JSON byte[] into a Java object. Event Hubs will internally default to a minimum of 20,000 ms.

If client authentication is not required by the broker, the following is a minimal SSL configuration: security.protocol=SSL, ssl.truststore.location=/var/private/ssl/kafka.client.truststore.jks, and ssl.truststore.password=test1234.
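The core configuration described above is just a set of key-value pairs. A minimal sketch using only java.util.Properties follows; the class name CoreProducerConfig, the localhost broker address, and the choice of String serializers are assumptions for illustration, while the truststore values are the placeholders from the SSL example. In an application that uses the kafka-clients library, the resulting object would be passed to the KafkaProducer constructor.

```java
import java.util.Properties;

// Hypothetical helper: collects standard Kafka producer settings into one Properties object.
// Only JDK classes are used; serializer names are plain strings here.
final class CoreProducerConfig {

    static Properties build(String bootstrapServers) {
        Properties props = new Properties();
        // Where the producer finds the cluster (comma-separated host:port pairs).
        props.setProperty("bootstrap.servers", bootstrapServers);
        // Serializers turn keys and values into bytes before they are sent.
        props.setProperty("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.setProperty("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        // Wait for all in-sync replicas to acknowledge each write.
        props.setProperty("acks", "all");
        // Upper bound on the time to report success or failure of a send (2 minutes).
        props.setProperty("delivery.timeout.ms", "120000");
        // Minimal TLS settings when the broker does not require client authentication.
        props.setProperty("security.protocol", "SSL");
        props.setProperty("ssl.truststore.location", "/var/private/ssl/kafka.client.truststore.jks");
        props.setProperty("ssl.truststore.password", "test1234");
        return props;
    }

    public static void main(String[] args) {
        Properties props = build("localhost:9092");
        props.list(System.out);
    }
}
```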
We will also look at the concept of Avro schema evolution, and at setting up and using Schema Registry with the Kafka Avro serializers. Spring Kafka version: 2.1.4.RELEASE. As we mentioned, Apache Kafka provides default serializers for several basic types, and it allows us to implement custom serializers. The figure above shows the process of sending messages to a Kafka topic through the network.
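To illustrate what a custom serializer does, here is a self-contained sketch that turns a hypothetical Order object into UTF-8 JSON bytes. It deliberately does not depend on kafka-clients: OrderSerializer only mirrors the shape of Kafka's Serializer<T>.serialize(String topic, T data) method, and the Order class and its hand-written JSON (with no escaping) are assumptions kept simple for illustration. A real implementation would implement org.apache.kafka.common.serialization.Serializer and would usually delegate to a JSON library.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical domain object to be sent to Kafka.
final class Order {
    final String id;
    final int quantity;

    Order(String id, int quantity) {
        this.id = id;
        this.quantity = quantity;
    }
}

// Stand-in shaped like Kafka's Serializer<T>.serialize(topic, data).
// Assumes ids contain no quotes or backslashes, since nothing is escaped.
final class OrderSerializer {

    byte[] serialize(String topic, Order data) {
        if (data == null) {
            return null; // like Kafka's built-in StringSerializer, map null to null
        }
        String json = "{\"id\":\"" + data.id + "\",\"quantity\":" + data.quantity + "}";
        return json.getBytes(StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] bytes = new OrderSerializer().serialize("orders", new Order("A-1", 3));
        System.out.println(new String(bytes, StandardCharsets.UTF_8)); // {"id":"A-1","quantity":3}
    }
}
```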

Step 6: Start a New Consumer.

You set properties on the KafkaProducer node to define how it connects to the Kafka messaging system and to specify the topic to which messages are sent. For more information, see Processing Kafka … As with the producer properties, the default consumer settings are specified in the config/consumer.properties file. To open a shell inside the broker container, run docker-compose exec broker bash. These are methods used by the binders to get merged configuration data (Boot and binder). Kafka has a notion of producer and consumer.
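Default settings such as those in config/consumer.properties are usually combined with application-specific overrides. The sketch below shows one way to layer them with java.util.Properties, whose getProperty() falls back to a defaults object. It is a generic illustration, not the binder's actual merge logic; the class name MergedConfig, the sample defaults, and the group id orders-service are assumed values.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Hypothetical helper: parse defaults in .properties syntax, then apply overrides on top.
final class MergedConfig {

    static Properties merge(String defaultsText, Properties overrides) {
        Properties defaults = new Properties();
        try {
            defaults.load(new StringReader(defaultsText));
        } catch (IOException e) {
            // Not expected when reading from an in-memory string.
            throw new UncheckedIOException(e);
        }
        // getProperty() on 'merged' falls back to 'defaults' for keys it does not hold itself.
        Properties merged = new Properties(defaults);
        for (String key : overrides.stringPropertyNames()) {
            merged.setProperty(key, overrides.getProperty(key));
        }
        return merged;
    }

    public static void main(String[] args) {
        // Assumed sample of a defaults file; check your own config/consumer.properties.
        String defaults = "bootstrap.servers=localhost:9092\ngroup.id=test-consumer-group\n";
        Properties overrides = new Properties();
        overrides.setProperty("group.id", "orders-service");
        Properties merged = merge(defaults, overrides);
        System.out.println(merged.getProperty("bootstrap.servers")); // localhost:9092
        System.out.println(merged.getProperty("group.id"));          // orders-service
    }
}
```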

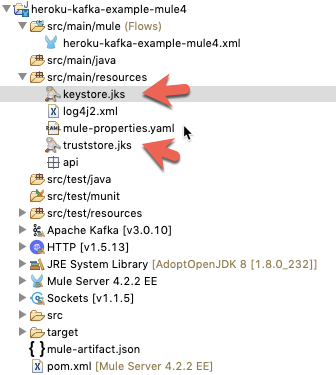

The acks setting supports three values: 0, 1, and all. Kafka, however, waits up to linger.ms milliseconds before sending a batch.

Step 8: Start a Consumer to Display Key-Value Pairs.

Create a Spring Boot project using https://start.spring.io/. The spring.kafka.producer.properties.* entry holds additional producer-specific properties used to configure the client. Other Spring Boot producer settings include spring.kafka.producer.retries (when greater than zero, enables retrying of failed sends), spring.kafka.producer.ssl.key-password (password of the private key in the key store file), spring.kafka.producer.ssl.key-store-location (location of the key store file), spring.kafka.producer.ssl.key-store-password (store password for the key store file), spring.kafka.producer.ssl.key-store-type (type of the key store), and spring.kafka.producer.ssl.protocol (SSL protocol to use).

Kafka Producer Configuration. Today, we will discuss the Kafka producer with an example. In our last Kafka tutorial, we discussed the Kafka cluster. The primary role of a Kafka producer is to take producer properties and a record as inputs and write the record to an appropriate Kafka broker. The compression.type property sets the compression type for all data generated by the producer. Modern Kafka clients are … You will also specify a client.id that uniquely identifies this producer.

In the dialog that appears, select a Publish operation and click Add Test Step.
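The three acks values can be summarized in a small validation helper. This is a hypothetical sketch (the AcksSetting class is not part of any Kafka API); it also accepts -1, which Kafka treats as a synonym for all.

```java
import java.util.Locale;

// Hypothetical helper: validate an acks value and describe what the producer waits for.
final class AcksSetting {

    static String describe(String acks) {
        switch (acks.trim().toLowerCase(Locale.ROOT)) {
            case "0":
                return "no acknowledgment: the producer does not wait for the broker";
            case "1":
                return "leader only: the partition leader acknowledges the write";
            case "all":
            case "-1":
                return "all in-sync replicas must acknowledge the write";
            default:
                throw new IllegalArgumentException("Invalid acks value: " + acks);
        }
    }

    public static void main(String[] args) {
        for (String v : new String[] {"0", "1", "all"}) {
            System.out.println("acks=" + v + " -> " + describe(v));
        }
    }
}
```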


The first one pushes messages to Kafka, while the second one fetches them. Kafka Tutorial 13: Creating Advanced Kafka Producers in Java (slides). Figure 2: The Application class in the demonstration project invokes either a Kafka producer or a Kafka consumer. If you need to override the default values, you can specify them as additional properties; the KafkaProducer(java.util.Properties properties, Serializer<K> keySerializer, Serializer<V> valueSerializer) constructor takes those properties together with explicit key and value serializers. Then create a producer class and send a message to the Kafka service. The KafkaServer section defines two users: kafka and ibm.
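For a broker using SASL/PLAIN, a KafkaServer section that defines the users kafka and ibm might be generated as sketched below. The assumption that the section uses PlainLoginModule, the class name KafkaServerJaas, and all passwords are illustrative. In this JAAS format, username and password are the broker's own credentials, and each user_<name> entry defines a user the broker accepts.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: build the text of a broker-side JAAS "KafkaServer" section for SASL/PLAIN.
final class KafkaServerJaas {

    static String build(String brokerUser, String brokerPassword, Map<String, String> users) {
        StringBuilder sb = new StringBuilder();
        sb.append("KafkaServer {\n");
        sb.append("    org.apache.kafka.common.security.plain.PlainLoginModule required\n");
        // Credentials the broker itself uses (e.g. for inter-broker connections).
        sb.append("    username=\"").append(brokerUser).append("\"\n");
        sb.append("    password=\"").append(brokerPassword).append("\"");
        // One user_<name>="<password>" entry per user the broker accepts.
        for (Map.Entry<String, String> e : users.entrySet()) {
            sb.append("\n    user_").append(e.getKey()).append("=\"").append(e.getValue()).append("\"");
        }
        sb.append(";\n};\n");
        return sb.toString();
    }

    public static void main(String[] args) {
        Map<String, String> users = new LinkedHashMap<>();
        users.put("kafka", "kafka-secret"); // placeholder password
        users.put("ibm", "ibm-secret");     // placeholder password
        System.out.print(build("kafka", "kafka-secret", users));
    }
}
```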


However, certain configuration properties are … Apache Kafka is open-source, widely used software for event streaming. Prerequisite: Apache Maven, properly installed according to the Apache instructions. KAFKA_LISTENERS is a comma-separated list of listeners, each giving the host/IP and port to which Kafka binds for listening.
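To make the KAFKA_LISTENERS format concrete, the following sketch splits a value such as PLAINTEXT://0.0.0.0:9092,SSL://:9093 into listener name, host, and port. ListenerParser is a hypothetical helper that does only minimal validation; per the Kafka broker documentation, an empty host binds to the default interface and 0.0.0.0 binds to all interfaces.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical parser for a comma-separated listener list (NAME://host:port,...).
final class ListenerParser {

    static final class Listener {
        final String name;
        final String host; // "" = default interface, "0.0.0.0" = all interfaces
        final int port;

        Listener(String name, String host, int port) {
            this.name = name;
            this.host = host;
            this.port = port;
        }
    }

    static List<Listener> parse(String listeners) {
        List<Listener> result = new ArrayList<>();
        for (String entry : listeners.split(",")) {
            String trimmed = entry.trim();
            int sep = trimmed.indexOf("://");
            if (sep <= 0) {
                throw new IllegalArgumentException("Expected NAME://host:port but got '" + trimmed + "'");
            }
            String name = trimmed.substring(0, sep);
            String hostPort = trimmed.substring(sep + 3);
            // Use the last colon so that bracketed IPv6 hosts keep their inner colons.
            int colon = hostPort.lastIndexOf(':');
            if (colon < 0) {
                throw new IllegalArgumentException("Missing port in '" + trimmed + "'");
            }
            String host = hostPort.substring(0, colon);
            int port = Integer.parseInt(hostPort.substring(colon + 1));
            result.add(new Listener(name, host, port));
        }
        return result;
    }

    public static void main(String[] args) {
        for (Listener l : parse("PLAINTEXT://0.0.0.0:9092,SSL://:9093")) {
            String shownHost = l.host.isEmpty() ? "<default interface>" : l.host;
            System.out.println(l.name + " -> " + shownHost + ":" + l.port);
        }
    }
}
```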

Creating Producer Properties. Before we can start a Kafka producer, we should start a Kafka cluster and create a Kafka topic. Prerequisites: an Apache Kafka on HDInsight cluster. For more information on the APIs, see the Apache documentation on the Producer API and Consumer API.

You can use the spring.kafka.producer.bootstrap-servers property, which takes a comma-delimited list of host:port pairs used to establish the initial connections to the Kafka cluster. Instead of creating a Java class marked with the @Configuration annotation, we can use either the application.properties file or application.yml.

Concepts: Producer: responsible for … For more complex networking, this might be an IP address associated with a given network interface on a machine.

After the Kafka producer collects batch.size bytes of messages for a partition, it sends that batch. The producer.properties file, on the other hand, uses 60000. In addition, the startup script generates producer.properties and consumer.properties files that you can use with the kafka-console-* tools.

In addition to the APIs that Kafka provides for different programming languages, Kafka also provides command-line tools for working with basic components such as producers, consumers, and topics. When key-value records are sent from the console, the numbers before the - will be the key and the part after it will be the value.

Next, we need to create a ConsumerFactory and pass it the consumer configuration, the key deserializer … Using this, DLQ-specific producer properties can be set. The Kafka producer created … This tutorial covers advanced producer topics like custom serializers, ProducerInterceptors, custom …
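The key-value convention above matches the console producer's key parsing, which can be enabled with, for example, `kafka-console-producer.sh --bootstrap-server localhost:9092 --topic Topic-Name --property parse.key=true --property key.separator=-` (older releases use --broker-list instead of --bootstrap-server). The sketch below mimics splitting each line at the first separator; KeyValueLine is a hypothetical helper, and how the real tool handles lines without a separator may vary by version.

```java
// Hypothetical helper: split one console line into key and value at the first separator.
final class KeyValueLine {

    static String[] split(String line, String separator) {
        int idx = line.indexOf(separator);
        if (idx < 0) {
            throw new IllegalArgumentException("No key separator found in: " + line);
        }
        // Everything before the first separator is the key; the rest is the value.
        String key = line.substring(0, idx);
        String value = line.substring(idx + separator.length());
        return new String[] {key, value};
    }

    public static void main(String[] args) {
        String[] kv = split("1-first message", "-");
        System.out.println("key=" + kv[0] + ", value=" + kv[1]); // key=1, value=first message
    }
}
```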

Step 3: Start a Kafka Console Consumer.

Both properties can be set with the --properties option. Get the tuning right, and even a small adjustment to your producer configuration can make a significant improvement to the way your producers operate. While certainly not the only metric to be concerned about, maximizing throughput is often a main concern when tuning a system. To read more about how to configure your Spring Kafka consumer and producer properties, refer to the Spring Kafka consumer and producer documentation.

Kafka Spring Boot Example of Producer and Consumer. In this example, we'll use Spring Boot to configure them automatically for us using sensible defaults.

kafka-console-producer is a program that comes with the Kafka packages and acts as a source of data in Kafka. Kafka Producer Example: a producer is an application that generates tokens or messages and publishes them to one or more topics in the Kafka cluster. The Kafka Producer API helps to pack the message and deliver it to the Kafka server. In this tutorial, we shall learn about the Kafka producer with the help of an example Kafka producer in Java.

When a Kafka producer sets acks to all (or -1), min.insync.replicas specifies the minimum number of replicas that must acknowledge a write for the write to be considered successful. A partitioner class can be set using the spring.cloud.stream.kafka.binder.producer-properties.partitioner.class property or at …

If retries > 0, for example retries = 2147483647, the producer won't retry the request forever; it is bounded by a timeout. These values can be configured using internal parameters such as delivery.timeout.ms. The version of the Kafka client that Flink's connector uses may change between Flink releases.

On Windows, start a console consumer that reads NewTopic from the beginning with: `C:\kafka>.\bin\windows\kafka-console-consumer.bat --bootstrap-server localhost:9092 --topic NewTopic --from-beginning`

Apache Kafka Producer for Beginners (2022).
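The min.insync.replicas rule described above can be expressed as a simple predicate. This is a simplified model: MinInsyncCheck is a hypothetical helper that ignores other failure modes such as an unavailable leader, and the replication factor of 3 with min.insync.replicas=2 is only an example.

```java
// Hypothetical model of the acks=all durability rule.
final class MinInsyncCheck {

    // With acks=all (or -1), a write is accepted only if the in-sync replica (ISR) count
    // is at least min.insync.replicas; otherwise the broker rejects it and the producer
    // sees a NotEnoughReplicas error. Other failure modes are ignored here.
    static boolean acceptsWrite(String acks, int inSyncReplicas, int minInsyncReplicas) {
        boolean waitsForAll = acks.equals("all") || acks.equals("-1");
        if (!waitsForAll) {
            return true; // min.insync.replicas is not enforced for acks=0 or acks=1
        }
        return inSyncReplicas >= minInsyncReplicas;
    }

    public static void main(String[] args) {
        // Replication factor 3 with min.insync.replicas=2:
        System.out.println(acceptsWrite("all", 3, 2)); // true: all replicas in sync
        System.out.println(acceptsWrite("all", 2, 2)); // true: one replica lagging or down
        System.out.println(acceptsWrite("all", 1, 2)); // false: too few in-sync replicas
    }
}
```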

The bootstrap.servers property is set on the internal Kafka producer and consumer. With the producer and consumer APIs, data is written to Kafka once by the producer and read by the consumer, while with streams, data is streamed to Kafka as bytes and read back as bytes.

While requests with lower timeout values are accepted, client behavior isn't guaranteed. Make sure that your request.timeout.ms is at least the recommended value of 60000 and your session.timeout.ms is at least the recommended value of 30000. spring.kafka.producer.key-serializer specifies the serializer class for keys.
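The recommended minimums above can be checked before a client is created. The sketch below is a hypothetical helper that flags request.timeout.ms and session.timeout.ms values below 60000 and 30000 ms respectively. It only inspects values that are explicitly set, so a client default that is itself below the recommendation would not be reported.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

// Hypothetical pre-flight check against the recommended timeout minimums.
final class EventHubsTimeoutCheck {

    static final long MIN_REQUEST_TIMEOUT_MS = 60_000;
    static final long MIN_SESSION_TIMEOUT_MS = 30_000;

    static List<String> warnings(Properties props) {
        List<String> out = new ArrayList<>();
        check(props, "request.timeout.ms", MIN_REQUEST_TIMEOUT_MS, out);
        check(props, "session.timeout.ms", MIN_SESSION_TIMEOUT_MS, out);
        return out;
    }

    // Unset keys are skipped; note that the client's built-in default may be lower.
    private static void check(Properties props, String key, long minimum, List<String> out) {
        String value = props.getProperty(key);
        if (value != null && Long.parseLong(value.trim()) < minimum) {
            out.add(key + "=" + value.trim() + " is below the recommended " + minimum);
        }
    }

    public static void main(String[] args) {
        Properties props = new Properties();
        props.setProperty("request.timeout.ms", "30000");
        props.setProperty("session.timeout.ms", "30000");
        // Prints: request.timeout.ms=30000 is below the recommended 60000
        warnings(props).forEach(System.out::println);
    }
}
```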

This property is optional.

Producer. The producer factory needs to be set with some mandatory properties, among them the BOOTSTRAP_SERVERS_CONFIG property, which specifies a list of host:port pairs used for establishing the initial connections to the Kafka cluster. There are many configuration settings available that affect the producer's behavior, and we will also learn about these Kafka producer configuration settings. The above snippet creates a Kafka producer with some properties. Then, we build our map by passing the default values for the producer and overriding the default Kafka key and value serializers. The producer is thread safe, and sharing a single producer … Use this as shorthand if not setting consumerConfig and producerConfig.

This plugin does support using a proxy when communicating with the Schema Registry, through the schema_registry_proxy option.

After starting the Kafka console producer, start the Kafka console consumer to consume the messages from the topic to which the console producer published them. Step 5: Send New Records from Kafka Console Producer.

October 15, 2020 by Paul Mellor.

Create and Set Up a Spring Boot Project in IntelliJ. spring.kafka.consumer.value-deserializer specifies the deserializer class for values. Kafka is mainly used for service integration, data integration, creating data pipelines, and real-time data analytics, among many other uses.
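The bootstrap servers value is a comma-separated list of host:port pairs. As an illustration of that format, this sketch parses it into java.net.InetSocketAddress objects with InetSocketAddress.createUnresolved, which avoids a DNS lookup. The BootstrapServers class and the broker names are hypothetical, and the Kafka client performs its own parsing and validation.

```java
import java.net.InetSocketAddress;
import java.util.ArrayList;
import java.util.List;

// Hypothetical parser for a bootstrap.servers value such as "broker1:9092,broker2:9092".
final class BootstrapServers {

    static List<InetSocketAddress> parse(String value) {
        List<InetSocketAddress> result = new ArrayList<>();
        for (String entry : value.split(",")) {
            String trimmed = entry.trim();
            if (trimmed.isEmpty()) {
                continue; // tolerate stray commas
            }
            int colon = trimmed.lastIndexOf(':');
            if (colon <= 0 || colon == trimmed.length() - 1) {
                throw new IllegalArgumentException("Expected host:port but got '" + trimmed + "'");
            }
            String host = trimmed.substring(0, colon);
            int port = Integer.parseInt(trimmed.substring(colon + 1));
            // createUnresolved() records host and port without resolving the name.
            result.add(InetSocketAddress.createUnresolved(host, port));
        }
        if (result.isEmpty()) {
            throw new IllegalArgumentException("bootstrap.servers must list at least one host:port pair");
        }
        return result;
    }

    public static void main(String[] args) {
        for (InetSocketAddress a : parse("broker1:9092, broker2:9092")) {
            System.out.println(a.getHostString() + " port " + a.getPort());
        }
    }
}
```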

In this process, the custom serializer converts the object into bytes before the producer sends the message to the topic. In the kafka-python client, the producer is the class kafka.KafkaProducer(**configs). The Kafka producer is conceptually much simpler than the consumer, since it has no need for group coordination. We have also created application-specific properties to configure the Kafka producer and consumer topics. Let's create a KafkaProducerService interface and its implementation to send messages to a Kafka topic. We just autowire KafkaTemplate and use its send method to publish messages to the topic.
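A KafkaProducerService interface keeps callers independent of the concrete client, much as the Producer interface lets code switch between KafkaProducer and MockProducer. The sketch below uses an assumed send(topic, key, value) signature and an in-memory RecordingProducerService, both hypothetical, that records messages instead of contacting a broker. In a Spring Boot application the real implementation would instead call send on an injected KafkaTemplate.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical interface: callers depend on this abstraction, not on a concrete client.
interface KafkaProducerService {
    void send(String topic, String key, String value);
}

// In-memory stand-in that only records what would have been sent (useful in unit tests).
final class RecordingProducerService implements KafkaProducerService {

    private final List<String> sent = new ArrayList<>();

    @Override
    public synchronized void send(String topic, String key, String value) {
        sent.add(topic + "|" + key + "|" + value);
    }

    // Returns a snapshot so callers cannot modify the internal list.
    synchronized List<String> sent() {
        return Collections.unmodifiableList(new ArrayList<>(sent));
    }

    public static void main(String[] args) {
        RecordingProducerService service = new RecordingProducerService();
        service.send("hello-topic", "1", "first message");
        System.out.println(service.sent()); // [hello-topic|1|first message]
    }
}
```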

Once all services have successfully launched, you will have a basic Kafka environment running and ready to use. When linger.ms is 0 (its long-standing default), the producer does not deliberately wait for more records, so each record is sent as soon as possible; records can still end up batched together under load. Previously, we saw how to create a Spring Kafka consumer and producer that manually configure the producer and consumer. Maven is … The acks setting is a client (producer) configuration. To learn about each property, visit the official Kafka documentation at https://kafka.apache.org/documentation. Partition: the partition to send the record to. Producer Role.
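The interplay of linger.ms and batch.size can be approximated by a simple readiness rule: a batch is sent when it is full or when it has waited at least linger.ms. This is a simplified sketch; BatchReadiness is hypothetical, and the real producer's record accumulator also considers memory pressure, flush() calls, and shutdown, which are ignored here. The value 16384 is the producer's default batch.size in bytes.

```java
// Hypothetical, simplified model of when a producer batch becomes ready to send.
final class BatchReadiness {

    // Ready once the batch is full (batch.size bytes) or has lingered at least linger.ms.
    static boolean isReady(int batchBytes, int batchSizeBytes, long waitedMs, long lingerMs) {
        boolean full = batchBytes >= batchSizeBytes;
        boolean lingered = waitedMs >= lingerMs;
        return full || lingered;
    }

    public static void main(String[] args) {
        int batchSize = 16384; // default batch.size in bytes
        System.out.println(isReady(200, batchSize, 0, 0));       // true: linger.ms=0 never waits
        System.out.println(isReady(200, batchSize, 2, 5));       // false: not full, still lingering
        System.out.println(isReady(batchSize, batchSize, 2, 5)); // true: batch is full
    }
}
```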

Compression is of full batches of data, and the default compression type is none (i.e., no compression). How to Start a Kafka Consumer. Apache Kafka offers various properties that are used for creating a producer; the producer configuration is a simple key-value map. Records fail if they can't be delivered within delivery.timeout.ms. To learn how to create the cluster, see Start with Apache Kafka on HDInsight. The constructor accepts the following arguments: A default … The default is 0.0.0.0, which means listening on all interfaces.

To start the console producer, run `kafka-console-producer.sh --broker-list localhost:9092 --topic Topic-Name` (newer releases accept --bootstrap-server instead of --broker-list), then use the terminal to add several lines of messages.

When native decoding is enabled on the … Message durability: you can control the durability of messages written to Kafka through the acks setting. spring.kafka.producer.key-serializer: serializer class for keys. spring.kafka.consumer.properties.spring.json.trusted.packages specifies a comma-delimited list of package patterns allowed for deserialization.
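Because records fail once delivery.timeout.ms expires, that timeout has to leave room for lingering and for at least one request. The Kafka documentation states that delivery.timeout.ms should be equal to or larger than the sum of request.timeout.ms and linger.ms; the hypothetical helper below encodes just that check, using the defaults of 120000 ms for delivery.timeout.ms and 30000 ms for request.timeout.ms as example values.

```java
// Hypothetical check of the documented relationship between producer timeouts.
final class DeliveryTimeoutCheck {

    // delivery.timeout.ms should be >= linger.ms + request.timeout.ms.
    static boolean isConsistent(long deliveryTimeoutMs, long lingerMs, long requestTimeoutMs) {
        return deliveryTimeoutMs >= lingerMs + requestTimeoutMs;
    }

    public static void main(String[] args) {
        // 120000 ms delivery timeout, 0 ms linger, 30000 ms request timeout.
        System.out.println(isConsistent(120_000, 0, 30_000)); // true
        // Too short: the whole delivery window is smaller than one request timeout.
        System.out.println(isConsistent(20_000, 0, 30_000));  // false
    }
}
```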

To create a Kafka producer, you will need to pass it a list of bootstrap servers (a list of Kafka brokers). The code's configuration settings are encapsulated into a helper …

Typically, this means writing a program using the KafkaProducer API. We need to configure our Kafka producer and consumer so that they can publish messages to and read messages from the topic. When using the quarkus-kafka-client extension, you can enable a readiness health check by setting the quarkus.kafka.health.enabled property to true in your application.properties. Finally, to create a Kafka producer, run the following: … You can fine-tune Kafka producers using configuration properties to optimize the streaming of data to consumers.

In this example, we are going to send messages with IDs. Prerequisite: Java Developer Kit (JDK) version 8 or an equivalent, such as OpenJDK.

If the producer cannot reach the broker, it logs a warning such as:

06:22:54.492 [kafka-producer-network-thread | producer-1] WARN o.apache.kafka.clients.NetworkClient - [Producer clientId=producer-1] Connection to node 0 could not be established. Broker may not be available.

Logstash instances by default form a single logical group to subscribe to Kafka topics. Each Logstash … The Logstash Kafka consumer handles group management and uses the default offset management strategy using Kafka topics.

The linger.ms property makes sense when you have a large number of messages to send. A Kafka Console Producer (kafka-console-producer) is one of the utilities that comes with the Kafka packages. The producer will start and wait for you to enter input.

A producer.properties file for SASL/PLAIN contains security.protocol=SASL_PLAINTEXT and sasl.mechanism=PLAIN (as shown by cat producer.properties). The consumer.properties file is an example of how to use PEM certificates as strings.

Step 2: Create the Kafka Topic. Create the following file input.txt in the base directory of the tutorial.

A ProducerRecord consists of six components: topic (the topic to send the record to), partition, timestamp, key, value, and headers. Producer is the interface that KafkaProducer implements; MockProducer implements it as well. A Kafka producer can write to different partitions in parallel, which generally means that it can achieve higher levels of throughput.

Configure Kafka Producer and Consumer in an application.properties File. Explaining the Kafka producer's internal workings, configurations, idempotent behavior, and the safe producer. Spring Boot Kafka Producer: in this tutorial, we are going to see how to publish Kafka messages with Spring Boot Kafka Producer. If Kafka is running in a cluster … However, if the producer and consumer …

Clairvoyant carries vast experience in big data and cloud technologies.
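The six parts of a ProducerRecord can be pictured as a plain value class. RecordSketch below is a hypothetical illustration, not the kafka-clients class: it mirrors the topic, partition, timestamp, key, value, and headers fields, and simplifies headers to a map even though real Kafka headers allow repeated keys.

```java
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical value class mirroring the six components of a Kafka ProducerRecord.
final class RecordSketch<K, V> {
    final String topic;                // required: the topic to send the record to
    final Integer partition;           // null: let the partitioner choose
    final Long timestamp;              // null: let the producer assign a timestamp
    final K key;                       // optional; when present it usually drives partitioning
    final V value;                     // the payload
    final Map<String, byte[]> headers; // simplified: real headers allow repeated keys

    RecordSketch(String topic, Integer partition, Long timestamp, K key, V value,
                 Map<String, byte[]> headers) {
        if (topic == null) {
            throw new IllegalArgumentException("topic cannot be null");
        }
        this.topic = topic;
        this.partition = partition;
        this.timestamp = timestamp;
        this.key = key;
        this.value = value;
        // Defensive, read-only copy; a null argument becomes an empty map.
        this.headers = headers == null
                ? Collections.<String, byte[]>emptyMap()
                : Collections.unmodifiableMap(new LinkedHashMap<>(headers));
    }

    public static void main(String[] args) {
        RecordSketch<String, String> r =
                new RecordSketch<>("hello-topic", null, null, "1", "first message", null);
        System.out.println(r.topic + " key=" + r.key + " value=" + r.value
                + " partition=" + r.partition);
    }
}
```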
If used, this component will …

Spring Boot Kafka Producer Example: in the prerequisites section above, we started ZooKeeper and the Kafka server, created a topic named hello-topic, and started the Kafka console consumer. The Kafka connector uses the default values for producer and consumer settings that are listed in the official Kafka documentation.