ZooKeeper is a top-level Apache project that acts as a centralized service and keeps track of the status of your Kafka cluster nodes. However, there is a limit of 10,000 on the number of async call responses stored in a cluster. A Kubernetes cluster can be divided into namespaces. If ZooKeeper is running, fine; otherwise, check the ZooKeeper server log. Use the Installation Script instructions to download the CLI for Confluent Cloud management. To configure ZooKeeper in standalone mode, create a new zoo.cfg file in the zookeeper directory. Contribute to sentry-kubernetes/charts development by creating an account on GitHub. Step 2: After ZooKeeper has started, start the Kafka server. You can configure log verbosity to see more or less detail. Note that both the Job spec and the Pod template spec within the Job have an activeDeadlineSeconds field. As of now, REQUESTSTATUS does not automatically clean up the tracking data structures, meaning the status of completed or failed tasks stays stored in ZooKeeper unless cleared manually. Contribute to cookeem/kubernetes-zookeeper-cluster development by creating an account on GitHub. Logs can be as coarse-grained as showing errors within a component, or as fine-grained as showing step-by-step traces of events (like HTTP access logs, pod state changes, controller actions, or scheduler decisions). As mentioned in the previous blog post, ZooKeeper is installed and configured by default with CloudKarafka, depending on the number of nodes in your cluster. SolrCloud is flexible distributed search and indexing, without a master node to allocate nodes, shards, and replicas. The Docker daemon requires root privileges, so special care must be taken regarding who gets access to this process and where the process resides. Therefore only one Kafka cluster will be returned in the response. 
Example request: The hooks Use Port Forwarding to Access Applications in a Cluster; Use a Service to Access an Application in a Cluster; Connect a Frontend to a Backend Using Services; Create an External Load Balancer; List All Container Images Running in a Cluster; Set up Managed Zookeeper. 3) For FAQ, keep your answer crisp with examples. HMaster assigns regions to region servers and in turn, check the health status of region servers. Then if you have a conflicting release then probably need to delete the release again with the --purge flag. The output is similar to this: NAME READY STATUS RESTARTS AGE IP NODE weave-net-1t1qg 2/2 Running 0 9d 192.168.2.10 worknode3 weave-net-231d7 2/2 Running 1 7d 10.2.0.17 worknodegpu weave-net-7nmwt 2/2 Running 3 9d 192.168.2.131 masternode weave-net-pmw8w 2/2 Running 0 9d 192.168.2.216 worknode2 Here we are trying to grep the Zookeeper services process id. Synopsis The Kubernetes controller manager is a daemon that embeds the core control loops shipped with Kubernetes. Therefore only one Kafka cluster will be returned in the response. Example request: Confluent CLI v2 replaces the former Confluent Cloud CLI for management of Confluent Cloud. This page explains how Kubernetes objects are represented in the Kubernetes API, and how you can express them in .yaml format. Most modern applications have some kind of logging mechanism. The Hadoop framework, built by the Apache Software Foundation, includes: Hadoop Common: The common utilities and libraries that support the other Hadoop modules. This check is executed regardless of the configured implementation. For production environments, you need to run ZooKeeper in replication mode. For more information, please read document. Afterward, we will also turn on ZooKeeper client (bin/zkCli.sh). An Azure subscription. Application logs can help you understand what is happening inside your application. 
The output is similar to this: NAME READY STATUS RESTARTS AGE IP NODE weave-net-1t1qg 2/2 Running 0 9d 192.168.2.10 worknode3 weave-net-231d7 2/2 Running 1 7d 10.2.0.17 worknodegpu weave-net-7nmwt 2/2 Running 3 9d 192.168.2.131 masternode weave-net-pmw8w 2/2 Running 0 9d 192.168.2.216 worknode2 A container image represents binary data that encapsulates an application and all its software dependencies. We will show you how to create a table in HBase using the hbase shell CLI, insert rows into the table, perform put and Apache ZooKeeper is a distributed, open-source coordination service for distributed applications. Search: Zookeeper Docker Example. The CloudBees CD/RO server cluster must be installed and running on the network. It is our most basic deploy profile. If you need to run v1 and v2 of the Confluent CLI in parallel to manage both cloud and on-prem clusters, see Run multiple CLIs in parallel. The confluent local commands are intended for a single-node development environment and are not suitable for a production environment. Learn about ZooKeeper by reading the documentation. DELETESTATUS can be used to clear the stored statuses.
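The text above mentions creating a zoo.cfg file for standalone mode but does not show its contents. As a rough sketch only, the snippet below renders the three settings used by the standalone example in the ZooKeeper Getting Started guide (tickTime, dataDir, clientPort). The helper name `render_standalone_zoo_cfg` and the default values are assumptions chosen for illustration, not something taken from this page.

```python
def render_standalone_zoo_cfg(data_dir="/var/lib/zookeeper",
                              client_port=2181,
                              tick_time=2000):
    """Return the text of a minimal standalone zoo.cfg.

    Hypothetical helper: the defaults mirror the standalone example in the
    ZooKeeper Getting Started guide and should be adjusted for your host.
    """
    lines = [
        f"tickTime={tick_time}",      # basic time unit in milliseconds
        f"dataDir={data_dir}",        # where snapshots are stored
        f"clientPort={client_port}",  # port that clients connect to
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Print the config so it can be redirected into conf/zoo.cfg.
    print(render_standalone_zoo_cfg(), end="")
```

Redirecting the output into conf/zoo.cfg inside the ZooKeeper directory would give a file suitable for development and testing, not for production.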


For production environments, you need to run ZooKeeper in replication mode. For more information, see the connector Git repo and version specifics. Second, I stopped ZooKeeper and restarted Genesis, following one of the Nutanix KB articles, but it still looks the same.

It is written in Python and supports "Distributed Configuration Store" backends, including ZooKeeper, etcd, Consul, and Kubernetes. By default, ZooKeeper redirects stdout/stderr outputs to the console. If ZooKeeper is running, fine; otherwise, check the ZooKeeper server log. Next, set a password with the following command: passwd zookeeper. From Accumulo to ZooKeeper, if you are looking for a rewarding experience in Open Source and industry leading software, chances are you are going to find it here. Specifically, they can describe what containerized applications are running. You can check the location and credentials that kubectl knows about. To access a cluster, you need to know the location of the cluster and have credentials to access it. On the client machine run the following command, replacing Apache-ZooKeeper-node with the address of one of the Apache ZooKeeper nodes that you obtained in the previous step. Before you begin: before starting this tutorial, you should be familiar with the following Kubernetes concepts: Pods, Cluster DNS, Headless Services, PersistentVolumes, PersistentVolume Provisioning, and StatefulSets. The client communicates in a bi-directional way with both HMaster and ZooKeeper. This section describes the setup of a single-node standalone HBase. Install. You can check with kubectl get services (or add the --all-namespaces flag if it might be in a different namespace). In my example I use CentOS 8: sudo yum install java-11-openjdk-devel. This is necessary because this is how users/groups are identified and authorized during access decisions. This corresponds to the path of the children that you want to get data for. Use any of the methods described in Getting the Apache ZooKeeper connection string for an Amazon MSK cluster to get the addresses of the cluster's Apache ZooKeeper nodes. Likewise, container engines are designed to support logging. In the entire architecture, there are multiple region servers. 
System component logs record events happening in cluster, which can be very useful for debugging. Start by installing ZooKeeper on a single machine or a very small cluster . No defaults. Typically, this is automatically set-up when you work through a Getting started guide, or someone else setup the cluster and provided you with credentials and a location. Feedback. Currently both Kafka and Kafka REST Proxy are only aware of the Kafka cluster pointed at by the bootstrap.servers configuration. Zookeeper is just a Java process and when you start a Zookeeper instance it runs a org.apache.zookeeper.server.quorum.QuorumPeerMain class. Once your download is complete, unzip the files contents using tar, a file archiving tool and rename the folder to spark. The all-volunteer ASF develops, stewards, and incubates more than 350 Open Source projects and initiatives that cover a wide range of technologies. Kubernetes uses these entities to represent the state of your cluster. Then if you have a conflicting release then probably need to delete the release again with the --purge flag. ssl_keystore. Get data. Note that both the Job spec and the Pod template spec within the Job have an activeDeadlineSeconds field.

Typically, this is automatically set-up when you work through a Getting started guide, or someone else setup the cluster and provided you with credentials and a location. This page shows how to configure default CPU requests and limits for a namespace. The client communicates in a bi-directional way with both HMaster and ZooKeeper. If you create a Pod within a namespace that has a default CPU limit, and any container in that Pod does not specify its own CPU limit, then the control plane assigns the default CPU limit to that container. Here are the steps for installing Zookeeper cluster on Linux (CentOS 8): 1. install Java 8+ on your Linux machine. Keep in mind that the restartPolicy applies to the Pod, and not to the Job itself: there is no automatic Job restart once the Job status is type: Failed.That is, the Job termination mechanisms activated with In particular, this article will cover -host Control Center deployment that includes a minimum of three hosts Use the following procedures to configure a ZooKeeper ensemble (cluster) for a multi-host Control Center deployment that includes a minimum of three hosts. For more information, please read document. This page explains how Kubernetes objects are represented in the Kubernetes API, and how you can express them in .yaml format. bin/kafka-topics.sh --describe --zookeeper localhost:2181 --topic test. This tutorial demonstrates running Apache Zookeeper on Kubernetes using StatefulSets, PodDisruptionBudgets, and PodAntiAffinity. Understanding Kubernetes objects Kubernetes objects are persistent entities in the Kubernetes system. Step 2: After started the Zookeeper, then will start the Kafka server. KAFKA_KRAFT_CLUSTER_ID: Kafka cluster ID when using Kafka Raft (KRaft). A standalone instance has all HBase daemons the Master, RegionServers, and ZooKeeper running in a single JVM persisting to the local filesystem. However, there is a limit of 10,000 on the number of async call responses stored in a cluster. Yes No. 
Cluster (v3) GET /clusters List Clusters Returns a list of known Kafka clusters. As long as a majority of the ensemble are up, the service will be available. The configuration below sets up ZooKeeper in standalone mode (used for developing and testing). Was this page helpful? Prerequisites. Most modern applications have some kind of logging mechanism. It also keeps track of Kafka topics, partitions etc. HMaster assigns regions to region servers and in turn, check the health status of region servers.
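The availability rule stated above (the service stays up as long as a majority of the ensemble is up) can be made concrete with a little arithmetic. The following is a minimal sketch; the function names `quorum_size` and `tolerated_failures` are illustrative and are not part of any ZooKeeper API.

```python
def quorum_size(ensemble_size):
    """Smallest number of servers that forms a strict majority."""
    if ensemble_size < 1:
        raise ValueError("ensemble_size must be at least 1")
    return ensemble_size // 2 + 1


def tolerated_failures(ensemble_size):
    """How many servers can be down while a majority is still up."""
    return ensemble_size - quorum_size(ensemble_size)


if __name__ == "__main__":
    for n in (1, 3, 4, 5):
        print(n, quorum_size(n), tolerated_failures(n))
```

With these definitions, a 3-node and a 4-node ensemble both tolerate a single failure, which is one reason odd ensemble sizes are usually preferred.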

First, create a zookeeper user with the following commands: useradd zookeeper -m, then usermod --shell /bin/bash zookeeper. ZooKeeper is an open source coordination service. The example environment is Docker, CentOS 7, and Java 8. ZooKeeper must be running before Kafka starts, so if ZooKeeper is down, none of the above tasks will happen. In our example, we use the basic Python 3 image as our launching point. The large file service is commented out (not needed at the moment). ZooKeeper is a top-level Apache project that acts as a centralized service and keeps track of the status of your Kafka cluster nodes. You typically create a container image of your application and push it to a registry before referring to it in a Pod. This page provides hints on diagnosing DNS problems. It is recommended to run this tutorial on a cluster with at least two nodes that are not acting as control plane hosts. In Kubernetes, a controller is a control loop that watches the shared state of the cluster through the apiserver and makes changes attempting to move the current state towards the desired state. You can redirect to a file located in /logs by passing the environment variable ZOO_LOG4J_PROP as follows: $ docker run --name some-zookeeper --restart always -e ZOO_LOG4J_PROP="INFO,ROLLINGFILE" zookeeper. As of now, REQUESTSTATUS does not automatically clean up the tracking data structures, meaning the status of completed or failed tasks stays stored in ZooKeeper unless cleared manually. This page describes how kubelet managed Containers can use the Container lifecycle hook framework to run code triggered by events during their management lifecycle. ssl_keystore.p12. This is really a sanity check.

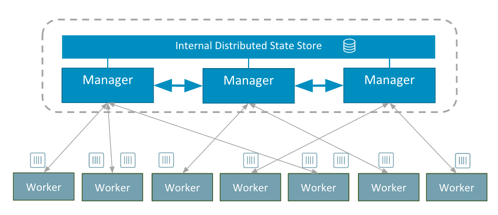

Configuration files that all CloudBees CD/RO server nodes will use in a clustered configuration must be uploaded to the Apache ZooKeeper server using the ZKConfigTool. If the node has crashed, frozen, or otherwise entered an unhealthy state, the health monitor will mark it as unhealthy. No defaults. Before you begin: before starting this tutorial, you should be familiar with the following Kubernetes concepts: Pods, Cluster DNS, Headless Services, PersistentVolumes, PersistentVolume Provisioning, and StatefulSets. For read and write operations, the client contacts the HRegion servers directly. One of the nodes is automatically elected (via Apache ZooKeeper) as the Cluster Coordinator. Confluent CLI v2 replaces the former Confluent Cloud CLI for management of Confluent Cloud. This is a tool used to manage and monitor ClickHouse databases. Overview: analogous to many programming language frameworks that have component lifecycle hooks, such as Angular, Kubernetes provides Containers with lifecycle hooks. Ensure that you set this field at the proper level. Create a free Azure account. If you have a specific, answerable question about how to use Kubernetes, ask it on Stack Overflow. Open an issue in the GitHub repo if you want to report a problem or suggest an improvement. ZooKeeper keeps track of the status of the Kafka cluster nodes, and it also keeps track of Kafka topics, partitions, etc. The logs are particularly useful for debugging problems and monitoring cluster activity. The configuration below sets up ZooKeeper in standalone mode (used for developing and testing). The ZooKeeper cluster must be running an odd number of ZooKeeper nodes, and there must be a leader node. This page describes how kubelet managed Containers can use the Container lifecycle hook framework to run code triggered by events during their management lifecycle. 
ClickHouse supports multiple data types such as integer, floating point, character, and date. Disable RAM swap; swappiness can be set to 0 on certain Linux versions/distributions. Hence, once the client starts, we can perform the following operation: create znodes.

Launch the client by executing the zookeeper-client command. To access a cluster, you need to know the location of the cluster and have credentials to access it. Kubernetes uses these entities to represent the state of your cluster. CLUSTERSTATUS: Cluster Status. Hi Donnie, I followed the proper procedure for changing the CVM IP manually, and made sure both of my files were synced. ZooKeeper is a configuration management tool for distributed systems. This is necessary because this is how users/groups are identified and authorized during access decisions. This will write logs to /logs/zookeeper.log. This tutorial demonstrates running Apache ZooKeeper on Kubernetes using StatefulSets, PodDisruptionBudgets, and PodAntiAffinity. Verify that the following files are also present in the /opt/mapr/conf directory on the new ZooKeeper nodes: maprserverticket. On the client machine run the following command, replacing Apache-ZooKeeper-node with the address of one of the Apache ZooKeeper nodes that you obtained in the previous step. In applications of robotics and automation, a control loop is a non-terminating loop that regulates the state of the system. For example: telnet 172.16.21.3 2181. Quick Start. But it could possibly be that you have a Service object named zookeeper that isn't part of a helm release or that hasn't been cleaned up. When setting up an Apache Kafka cluster (check this section for more information), each Apache Kafka broker and logical client needs its own keystore. You can configure log verbosity to see more or less detail. Managed ZooKeeper. A standalone instance has all HBase daemons (the Master, RegionServers, and ZooKeeper) running in a single JVM persisting to the local filesystem. Easily deploy Sentry on your Kubernetes cluster. tar -xzf kafka_2.11-2.1.0.tgz, then mv kafka_2.11-2.1.0 kafka. 
Cluster (v3) GET /clusters List Clusters Returns a list of known Kafka clusters.
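The telnet check mentioned above (telnet 172.16.21.3 2181) only verifies that the ZooKeeper client port accepts TCP connections. A minimal sketch of the same check in Python follows; the helper name `port_is_open` and the 3-second default timeout are assumptions made for illustration.

```python
import socket


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds.

    This only proves that something is listening on the port; it does not
    confirm that the listener is a healthy ZooKeeper server.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    # Address and port taken from the telnet example in the text.
    print(port_is_open("172.16.21.3", 2181))
```

A successful connection is a quick sanity check before looking at the server log or trying a real client session.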


The Hadoop framework, built by the Apache Software Foundation, includes Hadoop Common: the common utilities and libraries that support the other Hadoop modules. Ensure that you set this field at the proper level. You can check for a running ZooKeeper like this: jps -l | grep zookeeper, or even like this: jps | grep Quorum. Update: regarding the question, will hostname be the hostname of my box? Set swappiness with: sudo sysctl vm.swappiness=1. Synopsis: the Kubernetes controller manager is a daemon that embeds the core control loops shipped with Kubernetes. Install. This page provides hints on diagnosing DNS problems. It exposes cluster information through a front-end interface and makes it easy to deploy, upgrade, and add nodes to the cluster.
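The jps check described above works because a ZooKeeper server runs the QuorumPeerMain class. As a small sketch, the function below extracts the matching process IDs from jps output passed in as text. The name `find_zookeeper_pids` is hypothetical, and the sample output in the usage section is made up for illustration.

```python
def find_zookeeper_pids(jps_output):
    """Return PIDs of JVMs whose main class is QuorumPeerMain.

    Accepts the text printed by `jps` (short class names) or `jps -l`
    (fully qualified class names); each line is "<pid> <main class>".
    """
    pids = []
    for line in jps_output.splitlines():
        parts = line.split(maxsplit=1)
        if len(parts) != 2 or not parts[0].isdigit():
            continue  # skip blank lines and JVMs with no reported class
        if parts[1].strip().endswith("QuorumPeerMain"):
            pids.append(int(parts[0]))
    return pids


if __name__ == "__main__":
    # Illustrative sample only; real output depends on the host.
    sample = (
        "4120 org.apache.zookeeper.server.quorum.QuorumPeerMain\n"
        "4388 kafka.Kafka\n"
        "5012 sun.tools.jps.Jps\n"
    )
    print(find_zookeeper_pids(sample))
```

On a real host the input could come from subprocess.run(["jps", "-l"], capture_output=True, text=True).stdout, assuming a JDK with jps is on the PATH.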
