Apache Kafka is an open-source, high-performance, fault-tolerant, and scalable platform for building real-time streaming data pipelines and applications. It is a streaming data store that decouples the applications producing streaming data (producers) from the applications consuming it (consumers). Kafka was created at LinkedIn to handle high-throughput, low-latency processing and was open-sourced in 2011.

The basic structure of Kafka consists of producers, a Kafka cluster, and consumers. Kafka stores records (data) in topics, and each topic is divided into partitions. Producers write records to topics; consumers subscribe to a specific topic and read the records the producers have written. A consumer reads from a particular partition, and the rate at which that partition can be served depends on the capacity of the machine that hosts it. The brokers that make up the cluster are managed and coordinated using Apache ZooKeeper (newer Kafka releases can instead run in KRaft mode without ZooKeeper).

Q: What ensures load balancing of the server in Kafka? Each partition has one leader and zero or more followers. By default, the leader handles all read and write requests for the partition, while the followers passively replicate the leader. If the leader fails, one of the followers takes over as leader. Because leadership for different partitions is spread across the brokers, this arrangement balances the load across the servers.

Many of the commercial Confluent Platform features are built into the brokers as a function of Confluent Server.
To let the producer send each record to the right broker, every Kafka node can answer a request for metadata about which servers are alive and where the leaders for the partitions of a topic are at any given time. The producer uses this metadata to send data directly to the broker that is the leader for the partition, without any intervening routing tier.

The consumer offset tracks a consumer's position in a partition: every record in a partition has a sequential offset, and a consumer group commits the offset of the next record it will read. This lets a consumer resume where it left off after a restart or rebalance, and it is a major feature of Kafka.
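The leader-based routing described above can be simulated in a few lines of Python; the sketch also shows a follower taking over when a leader's broker fails. This is a minimal sketch under simplifying assumptions, not Kafka's implementation: `ClusterMetadata`, `PartitionState`, `route`, and `broker_failed` are hypothetical names, there is no network, and the leader election that Kafka's controller performs is reduced to promoting the first in-sync follower on a live broker.

```python
from dataclasses import dataclass, field


@dataclass
class PartitionState:
    leader: int                               # broker id serving reads and writes
    replicas: list                            # broker ids holding a copy, leader included
    isr: list = field(default_factory=list)   # in-sync replicas, leader included


class ClusterMetadata:
    """Toy version of the metadata any broker can return: live brokers and partition leaders."""

    def __init__(self, live_brokers, partitions):
        self.live_brokers = set(live_brokers)
        self.partitions = partitions  # {(topic, partition): PartitionState}

    def leader_for(self, topic, partition):
        return self.partitions[(topic, partition)].leader

    def broker_failed(self, broker_id):
        """Mark a broker dead and promote an in-sync follower for each partition it led."""
        self.live_brokers.discard(broker_id)
        for state in self.partitions.values():
            state.isr = [b for b in state.isr if b != broker_id]
            if state.leader == broker_id:
                candidates = [b for b in state.isr if b in self.live_brokers]
                if not candidates:
                    raise RuntimeError("no in-sync replica left to take over")
                state.leader = candidates[0]


def route(metadata, topic, partition):
    """The producer sends directly to the partition leader; no routing tier in between."""
    return metadata.leader_for(topic, partition)
```

For example, with three brokers and broker 1 leading partition 0 of a hypothetical `orders` topic, calling `broker_failed(1)` moves that partition's leadership to broker 2, and `route` immediately starts returning the new leader.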


Multiple brokers typically work together to form a Kafka cluster, which provides load balancing, reliable redundancy, and failover; in Azure HDInsight, for example, each worker node in the cluster is a Kafka broker. ZooKeeper, which coordinates the brokers, is built for concurrent, resilient, and low-latency transactions.

By default, Azure Event Hubs uses a round-robin approach for rebalancing. In Apache Kafka, the strategy is chosen with the consumer setting `partition.assignment.strategy`; `RoundRobinAssignor` is one of the built-in options, alongside `RangeAssignor`, `StickyAssignor`, and `CooperativeStickyAssignor`.

You don't actually need a consumer group to consume all messages: a consumer can be assigned partitions directly instead of subscribing as part of a group. If you want every consumer to receive every message without load balancing, give each consumer its own consumer group.
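To make round-robin assignment concrete, here is a minimal Python sketch. The function names are hypothetical and this is not the source of Kafka's `RoundRobinAssignor`; it only shows the idea of dealing partitions out to group members in turn, and of recomputing the whole assignment when a member joins or leaves (an "eager" rebalance; cooperative rebalancing instead revokes only the partitions that need to move).

```python
def round_robin_assign(partitions, members):
    """Deal partitions to group members in turn, like dealing cards.

    Inputs are sorted so the same membership always yields the same assignment.
    """
    members = sorted(members)
    if not members:
        return {}
    assignment = {m: [] for m in members}
    for i, p in enumerate(sorted(partitions)):
        assignment[members[i % len(members)]].append(p)
    return assignment


def rebalance(partitions, members, joined=(), left=()):
    """Recompute the whole assignment after membership changes (an eager rebalance)."""
    current = (set(members) | set(joined)) - set(left)
    return round_robin_assign(partitions, current)
```

Adding a third consumer to a six-partition topic leaves each member with two partitions; when a consumer leaves, its partitions are redistributed among the rest.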


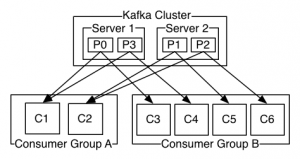

Kafka is a great piece of software with many capabilities, and it can be used in a wide range of use cases. To see how it balances load, let's discuss the two messaging models first. In the queuing model, each message is consumed by a single consumer from a pool of consumers; in the publish-subscribe model, every subscriber receives every message. As a distributed streaming platform, Kafka provides a publish-subscribe service, and it supports only one-to-many consumption rather than one-to-one (which seems strange at first glance, right?!). Consumer groups are how it also offers queue-like behavior: every group receives all the records in a topic, but within a group each partition is read by only one consumer. To consume messages as part of a consumer group with the console consumer, pass the `--group` option. A round-robin assignment distributes partitions evenly across the members of a group.

Kafka Streams has a low barrier to entry: you can quickly write and run a small-scale proof of concept on a single machine, and you only need to run additional instances of your application on multiple machines to scale up to high-volume production workloads.

A food-delivery application shows this in practice. Once an order is placed, all of its information is sent to a central message queue such as Kafka. The order-processing unit reads the order information and notifies the selected restaurant; at the same time, the system searches for available delivery partners nearby to pick up the order.
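The following Python sketch simulates how consumer groups combine the two messaging models, using the food-delivery example. The group names `restaurant-notifier` and `delivery-matching` and the `deliver` helper are hypothetical, and partition assignments are passed in by hand rather than computed by Kafka.

```python
from collections import defaultdict


def deliver(records, groups):
    """Simulate which consumers receive which records.

    records: (partition, value) pairs already written to one topic.
    groups:  {group_id: {consumer_id: [partitions assigned to that consumer]}}
    Every group sees every record (publish-subscribe); inside a group each
    partition has one owner, so each record is handled once (queuing).
    """
    received = {group_id: defaultdict(list) for group_id in groups}
    for partition, value in records:
        for group_id, assignment in groups.items():
            for consumer_id, owned in assignment.items():
                if partition in owned:
                    received[group_id][consumer_id].append(value)
    return {group_id: dict(by_consumer) for group_id, by_consumer in received.items()}
```

With orders spread over two partitions, both groups receive all the orders (publish-subscribe), while inside `delivery-matching` each order is handled by only one of its two consumers (queuing).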
Producers send data to the brokers. In Kafka, producer-side load balancing happens when the producer writes records to a topic without specifying a key: Kafka then spreads the data across the topic's partitions, a little to each. (Older producer clients did this round-robin, record by record; since Kafka 2.4, clients use a "sticky" approach that fills a batch for one partition before switching to another.) Records that do have a key are hashed, so all records with the same key go to the same partition.

On the consumer side, Kafka assigns the partitions of a topic to the consumers in a consumer group so that each partition is consumed by exactly one consumer in the group. Whenever a consumer enters or leaves the group (that is, when consumers subscribe or unsubscribe), the group is rebalanced and the partitions are redistributed across the current consumers. Kafka therefore handles load balancing with respect to the number of partitions per application instance for you.
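A toy partitioner illustrates the difference between keyed and keyless records. This is a sketch, not Kafka's built-in partitioner: `ToyPartitioner` is a hypothetical class, it uses `zlib.crc32` as a stand-in for the murmur2 hash Kafka applies to keys, and it spreads keyless records strictly round-robin, as older producer clients did.

```python
import itertools
import zlib


class ToyPartitioner:
    """Chooses a partition for each record.

    Keyed records: hash(key) mod num_partitions, so a key always maps to one
    partition (crc32 stands in for Kafka's murmur2 here).
    Keyless records: spread round-robin across all partitions.
    """

    def __init__(self, num_partitions):
        self.num_partitions = num_partitions
        self._next = itertools.cycle(range(num_partitions))

    def partition(self, key=None):
        if key is None:
            return next(self._next)
        return zlib.crc32(key.encode("utf-8")) % self.num_partitions
```

Because the hash depends only on the key, every record for a key such as `customer-42` lands on the same partition, which keeps those records in order, while keyless records cycle through partitions 0, 1, 2, 0, 1, 2.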
Q: What is load balancing? Load balancing spreads work across multiple systems so that no single system is overwhelmed as the load grows. In Kafka, partitions (and their leaders) are spread across brokers, and partitions are shared among the consumers in a group; replicating messages to other brokers adds redundancy on top of this. For example, if Broker 1 holds the leader for a partition, Broker 1 serves that partition's reads and writes while followers on other brokers keep copies.

Q: What is a smart producer/dumb broker? It is a broker that does not attempt to track which messages have been read by consumers. Kafka's brokers work this way: they retain records according to the topic's retention settings, and each consumer keeps track of its own position (offset) in the log, which is why Kafka's design is often summed up as "dumb broker, smart consumer".
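A minimal Python sketch of the "dumb broker, smart consumer" split: the log only appends records and serves reads by offset, while each consumer decides how far it has read and when to commit. `Log` and `Consumer` are hypothetical classes; in real Kafka, committed offsets are stored by the brokers in an internal topic, but it is still the consumer that chooses what to commit.

```python
class Log:
    """Broker side: append records and serve reads by offset, nothing more.

    It keeps no record of which consumer has read what.
    """

    def __init__(self):
        self._records = []

    def append(self, value):
        self._records.append(value)
        return len(self._records) - 1  # offset of the new record

    def read(self, offset, max_records=10):
        return self._records[offset:offset + max_records]


class Consumer:
    """The 'smart' side: tracks its own position and decides when to commit it."""

    def __init__(self, log, committed=0):
        self.log = log
        self.position = committed   # next offset to read
        self.committed = committed  # last position saved for restarts

    def poll(self, max_records=10):
        batch = self.log.read(self.position, max_records)
        self.position += len(batch)
        return batch

    def commit(self):
        self.committed = self.position
```

A consumer restarted from the last committed offset re-reads records it had fetched but never committed, and a second, independent consumer still sees the whole log from offset 0.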

Kafka looks like a traditional message broker, but its architecture is different: it stores records in partitioned, replicated logs that consumers read at their own pace.

Notice that `KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR` is set to 1. This is required when you are running with a single-node cluster. If you have three or more nodes, you can use the default.
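The replication-factor rule behind this setting can be written as a small check. In Docker images such as Confluent's, `KAFKA_*` environment variables are translated into broker properties, so this variable sets `offsets.topic.replication.factor`, whose default is 3. The function below is a hypothetical helper, not part of any Kafka tool; it only encodes the constraint that a replication factor cannot exceed the number of brokers.

```python
DEFAULT_OFFSETS_TOPIC_REPLICATION_FACTOR = 3  # broker default for offsets.topic.replication.factor


def offsets_topic_replication_factor(broker_count, requested=None):
    """Validate a replication factor for the internal offsets topic.

    A topic cannot have more replicas than there are brokers, so a
    single-node cluster must use 1; with three or more brokers the default works.
    """
    if broker_count < 1:
        raise ValueError("need at least one broker")
    rf = DEFAULT_OFFSETS_TOPIC_REPLICATION_FACTOR if requested is None else requested
    if rf > broker_count:
        raise ValueError(f"replication factor {rf} exceeds broker count {broker_count}")
    return rf
```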


You configure each Kafka topic with the number of partitions that you require to handle the expected consumer load. With more partitions, however, the load-balancing process has to work with more moving parts and more stress. By contrast, Google Cloud Pub/Sub does not have partitions; consumers instead read from a topic that autoscales according to demand.

Kafka's load-handling features can be summarized as follows:

- Load balancing: Kafka shares the partitions fairly among the consumers, which makes data consumption smooth and efficient.
- Rebalancing: if a new consumer is added or an existing one stops, Kafka rebalances the load across the available consumers.
- Scale: Kafka can handle up to millions of messages per second.

Confluent Platform is a specialized distribution with Kafka at its core and many additional features and APIs built in; the example Kafka use cases in this article could also be considered Confluent Platform use cases. If you want to use Confluent's Auto Data Balancing features, see the Auto Data Balancing documentation.
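A commonly used rule of thumb for choosing a partition count is to take the larger of two ratios: the target throughput divided by what one partition can sustain on the producer side, and the target divided by what one consumer can sustain from one partition. The sketch below applies that rule; the function name and all throughput figures are assumptions you would replace with measurements from your own cluster.

```python
import math


def partitions_needed(target_mb_s, producer_mb_s_per_partition, consumer_mb_s_per_partition):
    """Rule-of-thumb partition count.

    Enough partitions that neither the producer side nor the consumer side of a
    single partition caps throughput. All inputs are measured assumptions.
    """
    by_producer = target_mb_s / producer_mb_s_per_partition
    by_consumer = target_mb_s / consumer_mb_s_per_partition
    return math.ceil(max(by_producer, by_consumer))
```

With a 100 MB/s target, 20 MB/s per partition on the producer side, and 10 MB/s per consumer, the consumer side dominates and the rule suggests 10 partitions. Since each partition has exactly one owner within a group, consumers beyond the partition count sit idle; and as noted above, more partitions also mean more work for each rebalance.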
In Apache Camel, an endpoint URI such as `file:data/inbox?delay=5000` is resolved in parts. The scheme, `file`, selects `FileComponent`, which then works as a factory, creating a `FileEndpoint` based on the remaining parts of the URI. The context path, `data/inbox`, tells `FileComponent` that the starting folder is `data/inbox`, and the option `delay=5000` indicates that files should be polled at a 5-second interval. The `Processor` interface is used to implement consumers of message exchanges, to implement a Message Translator, and for other use cases; once you have written a class that implements `Processor`, you can use it in a route.

When consuming from Kafka with Fluentd's Kafka plugin (see the ruby-kafka README for more detailed documentation about ruby-kafka), the consuming topic name is used as the event tag: when the target topic name is `app_event`, the tag is `app_event`. To modify the tag, use the `add_prefix` or `add_suffix` parameters; with `add_prefix kafka`, the tag is `kafka.app_event`.

On Kubernetes, you can load confidential configuration values for a connector from Kubernetes Secrets or ConfigMaps, and a deployment file can manage Kafka broker deployments by load-balancing new Kafka pods.
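The Camel URI resolution steps can be mimicked in Python to show how the scheme, context path, and options are separated. This is not Camel's API: `FileComponent`, `FileEndpoint`, `COMPONENTS`, and `resolve` here are hypothetical stand-ins named after the Camel classes, and Python's `urllib.parse` does the splitting.

```python
from urllib.parse import parse_qs, urlsplit


class FileEndpoint:
    def __init__(self, directory, options):
        self.directory = directory
        raw = options.get("delay")
        # Delay between polls in milliseconds; None means "use the default".
        self.delay_ms = int(raw[0]) if raw else None


class FileComponent:
    """Acts as a factory: builds an endpoint from the rest of the URI."""

    def create_endpoint(self, remaining, options):
        return FileEndpoint(remaining, options)


# Hypothetical registry: the URI scheme selects the component.
COMPONENTS = {"file": FileComponent()}


def resolve(uri):
    parts = urlsplit(uri)
    component = COMPONENTS[parts.scheme]
    return component.create_endpoint(parts.path, parse_qs(parts.query))
```

Resolving `file:data/inbox?delay=5000` picks the `file` component, which builds an endpoint watching `data/inbox` with a 5000 ms delay between polls.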

Kafka fits well into modern distributed systems. For example, Flume's KafkaChannel uses Apache Kafka to stage events, and using a replicated Kafka topic as a channel helps avoid event loss in case of a disk failure. A Flume agent needs to know what individual components to load and how they are connected in order to constitute the flow. This is done by listing the names of each of the sources, sinks, and channels in the agent, and then specifying the connecting channel for each sink and source. Flume's Avro sink can also reset its connection periodically (`reset-connection-interval`), which allows it to connect to hosts behind a hardware load balancer when new hosts are added, without having to restart the agent.
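The Flume wiring rule (declare sources, sinks, and channels, then connect each source and sink to a channel) can be checked with a short Python sketch. The property names follow Flume's documented configuration format, with a source listing its `channels` and a sink naming a single `channel`; the `parse_flume_flow` helper and the agent name `a1` are hypothetical.

```python
def parse_flume_flow(properties_text, agent):
    """Build source->channels and sink->channel maps from Flume-style properties.

    Sources use `channels` (plural, a source can fan out); sinks use `channel`.
    Raises ValueError if a source or sink points at an undeclared channel.
    """
    props = {}
    for line in properties_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()

    def names(kind):
        return props.get(f"{agent}.{kind}", "").split()

    channels = set(names("channels"))
    flow = {"sources": {}, "sinks": {}}
    for src in names("sources"):
        chans = props.get(f"{agent}.sources.{src}.channels", "").split()
        if not chans or set(chans) - channels:
            raise ValueError(f"source {src} has missing or undeclared channels: {chans}")
        flow["sources"][src] = chans
    for sink in names("sinks"):
        chan = props.get(f"{agent}.sinks.{sink}.channel", "")
        if chan not in channels:
            raise ValueError(f"sink {sink} is not connected to a declared channel")
        flow["sinks"][sink] = chan
    return flow
```

In a configuration where agent `a1` uses a KafkaChannel named `c1` between source `r1` and sink `k1`, the helper returns that wiring; it raises an error if a sink points at a channel the agent never declared.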
