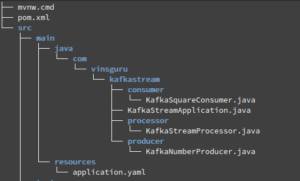

During the transmission of messages in Apache Kafka, the client and server agree on the use of a common syntactic format. Implementations of the Deserializer interface include ByteArrayDeserializer, ByteBufferDeserializer, BytesDeserializer, DoubleDeserializer and ExtendedDeserializer.Wrapper.

This method returns an instance of java.util.Properties to configure streams execution. StreamsConfig.APPLICATION_ID_CONFIG is an identifier for the stream processor. StreamsConfig.BOOTSTRAP_SERVERS_CONFIG is a list of host/port pairs to use for establishing the initial connection to the Kafka cluster.

Let us first create a Spring Boot project with the help of the Spring Boot Initializr, and then open the project in our favorite IDE.

JsonDeserializer.VALUE_DEFAULT_TYPE: fallback type for deserialization of values if no header information is present.

In kafka-python, the producer's client_id defaults to 'kafka-python-producer-#' (appended with a unique number per instance). key_serializer (callable): used to convert user-supplied keys to bytes. If not None, it is called as f(key) and should return bytes.

key.serializer: name of the class that will be used to serialize the key.

For SSL, the certificate chain for the private key is given in PEM format. Only X.509 certificates are supported.

camel.component.kafka.ssl-keymanager-algorithm. Kafka is run as a cluster on one or more servers that can span multiple data centers. If you want to produce a custom case class, you should implement a serializer for that case class. The common convention for the mapping is to combine the Kafka topic name with the key or value, depending on whether the serializer is used for the Kafka message key or value.
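The topic-plus-key-or-value naming convention mentioned above can be sketched in a few lines. This is only an illustration: the function name `subject_name` is hypothetical, and the `-key` / `-value` suffixes assume the topic-name-based strategy used by Confluent's Schema Registry serializers.

```python
def subject_name(topic: str, is_key: bool) -> str:
    """Build a schema subject name from a topic name.

    Assumption: the topic-name-based convention, where the subject is
    the topic name followed by "-key" or "-value".
    """
    suffix = "key" if is_key else "value"
    return f"{topic}-{suffix}"


# The same topic yields two subjects, one per part of the record.
key_subject = subject_name("orders", is_key=True)
value_subject = subject_name("orders", is_key=False)
```

Keeping the key and value schemas under separate subjects lets each evolve independently.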

dead-letter-queue.key.serializer: the serializer used to write the record key on the dead letter queue.

Next, let's write the producer.

In this example we'll use Spring Boot to automatically configure them for us using sensible defaults. This document describes how to implement a custom Java class and use it in your Kafka data set implementation so that you can apply custom logic and formats. All three major higher-level types in Kafka Streams - KStream

The NONE format is a special marker format that is used to indicate that ksqlDB should not attempt to deserialize that part of the Kafka record. Optionally you can also specify a Kafka URL. However, it would go with default values like 1 partition, which you might not want.

Serializer (kafka 2.1.0 API). Type Parameters: T - type to be serialized from. value_serializer (callable): used to convert user-supplied message values to bytes.

Even though we specified default serializers with StreamsConfig.DEFAULT_KEY_SERDE_CLASS_CONFIG and StreamsConfig.DEFAULT_VALUE_SERDE_CLASS_CONFIG in the Streams configuration, the Kafka Streams DSL allows us to specify a serializer / deserializer explicitly for each interaction.

AUTO_REGISTER_ARTIFACT. Avro uses JSON for defining data types and protocols, and serializes data in a compact binary format.

To learn more about the Gradle Avro plugin, please read this article on using Avro. default: the default value for the field, used by the consumer to populate the value when the field is missing from the message. In this post we'll explore these new classes. These offer typesafe methods to easily produce and consume messages for your Kafka Streams tests. Each record consists of a key, a value, and a timestamp. A Serde is essentially a wrapper around a deserializer on the inbound and a serializer on the outbound.

Remember to include the Kafka Avro Serializer lib (io.confluent:kafka-avro-serializer:3.2.1) and the Avro lib (org.apache.avro:avro:1.8.1). Serializer.serialize() converts data into a byte array. The algorithm used by the key manager factory for SSL connections. Serializer used for serializing the key published to Kafka. Kafka is suitable for both offline and online message consumption.

The String and byte array serializers are provided by Kafka out of the box, but they only accept Strings and byte arrays. Passing any other object type to them fails with a serialization error, so other types need their own serializer. Implementations of the Serializer interface include ByteArraySerializer, ByteBufferSerializer, BytesSerializer, DoubleSerializer, ExtendedSerializer.Wrapper, FloatSerializer, IntegerSerializer and LongSerializer.

Next, we need to create the Kafka producer and consumer configuration to be able to publish messages to and read messages from the Kafka topic. key.serializer: name of the class that will be used to serialize a key. Note that currently this only works when using the default key and value deserializers, where the Java Kafka reader reads keys and values as byte[].

The producer creates the objects, converts (serializes) them to JSON and publishes them by sending and enqueuing them to Kafka. Earlier versions of Kafka came with a default serializer, but that created a lot of confusion. The serializer of the key is set to StringSerializer and should be set according to the key's type. If no key serializer is configured, the stream materialization will result in an exception: org.apache.kafka.common.config.ConfigException: Missing required configuration "key.serializer" which has no default value.

With a producer and consumer, data is written once to Kafka; with a stream, data is streamed to Kafka as bytes and read as bytes. We will focus on the second of them, the Apache Kafka Streams binder.

Specifying an implementation of io.confluent.kafka.serializers.subject.SubjectNameStrategy is deprecated as of 4.1.3. String encoding defaults to UTF8 and can be customized by setting the property key.serializer.encoding, value.serializer.encoding or serializer.encoding.
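The encoding properties named above can be illustrated with a small stand-in for a string serializer. This is a sketch in Python, not the Kafka class itself: the class name `StringSerializerSketch` is hypothetical, and the lookup order (the key- or value-specific property first, then the shared `serializer.encoding`, then UTF-8) is an assumption modelled on how the Java StringSerializer is commonly described.

```python
from typing import Any, Mapping, Optional


class StringSerializerSketch:
    """Hypothetical stand-in for a string serializer with a configurable encoding."""

    def __init__(self) -> None:
        # UTF-8 is the default when no encoding property is set.
        self.encoding = "utf-8"

    def configure(self, configs: Mapping[str, Any], is_key: bool) -> None:
        # Prefer the key- or value-specific property, then the shared one.
        specific = "key.serializer.encoding" if is_key else "value.serializer.encoding"
        value = configs.get(specific)
        if value is None:
            value = configs.get("serializer.encoding")
        if isinstance(value, str):
            self.encoding = value

    def serialize(self, topic: str, data: Optional[str]) -> Optional[bytes]:
        # A missing key or value stays missing; nothing is encoded.
        if data is None:
            return None
        return data.encode(self.encoding)


serializer = StringSerializerSketch()
serializer.configure({"serializer.encoding": "latin-1"}, is_key=False)
encoded = serializer.serialize("demo-topic", "é")
```

The producer and the consumer have to agree on the encoding, otherwise the bytes written by one side will not decode to the same string on the other.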


The Kafka producer creates a record/message, which is an Avro record. Kafka itself has no default serialization format: it stores and transports byte arrays, and Avro is one common choice for encoding them. When you send Avro messages to Kafka, the messages contain an identifier of a schema stored in the Schema Registry.
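How a schema identifier can travel inside each message is easier to see with a small framing sketch. It assumes the Confluent wire format (one zero "magic" byte, a four-byte big-endian schema ID, then the encoded body). The function names `frame` and `unframe` are hypothetical, and the payload here is a placeholder byte string rather than real Avro-encoded data.

```python
import struct
from typing import Tuple

MAGIC_BYTE = 0  # assumption: Confluent wire format marker


def frame(schema_id: int, payload: bytes) -> bytes:
    """Prefix an already-encoded payload with the magic byte and schema ID."""
    return struct.pack(">bI", MAGIC_BYTE, schema_id) + payload


def unframe(message: bytes) -> Tuple[int, bytes]:
    """Split a framed message into (schema_id, payload)."""
    if len(message) < 5:
        raise ValueError("message too short to contain a schema ID header")
    magic, schema_id = struct.unpack(">bI", message[:5])
    if magic != MAGIC_BYTE:
        raise ValueError("unexpected magic byte")
    return schema_id, message[5:]


framed = frame(42, b"abc")        # placeholder payload, not real Avro
schema_id, body = unframe(framed)
```

A deserializer would use the recovered schema ID to fetch the writer's schema from the registry before decoding the body.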

A record is a key-value pair where the key is optional and the value is mandatory. This project implements a Kafka serializer / deserializer that integrates with the Confluent Schema Registry and leverages avro4k.

This partitioning is one of the crucial factors behind the horizontal scalability of Kafka. You can read more about it in the Spring Cloud documentation.

Notice that we include the Kafka Avro Serializer lib (io.confluent:kafka-avro-serializer:3.2.1) and the Avro lib (org.apache.avro:avro:1.8.1). The plugin will generate the Avro class for any .avsc file it finds in the configured folder.

Enter the connection properties: click the Properties tab and, in the Usage section, specify the settings for the read operation. camel.component.kafka.ssl-key-password (String).

Strictly speaking, we didn't need to define values like spring.kafka.consumer.key-deserializer or spring.kafka.producer.key-serializer in our application.properties.

The key.serializer and value.serializer config params are mandatory. Each takes an implementation of org.apache.kafka.common.serialization.Serializer. The basic properties of the producer are the address of the broker and the serializers of the key and value. Serializer.serialize() converts data into a byte array, because Kafka deals with messages or records in the form of byte arrays.

If you look at the rest of the code, there are only three steps. For example, Message is a case class that I would like to produce to the Kafka topic. Then we configured one consumer and one producer per created topic. Specify the serializer in the code for the Kafka producer to send messages, and specify the deserializer in the code for the Kafka consumer to read messages.

Serialization and Deserialization (Serdes): Kafka Streams uses a special class called Serde to deal with data marshaling. The channel configuration can still override any attribute. You can also set spring.json.use.type.headers (default true) to prevent even looking for headers.
The default configuration for Producer typeFunction (java.util.function.BiFunction

To start off with, you will need to change your Maven pom.xml file. I had some problems sending Avro messages using the Kafka Schema Registry. That is all that we do in a Kafka producer. For example: final Serde<Long> longSerde = Serdes.Long();

Serializer class for the key of the message. Default value is "org.apache.kafka.common.serialization.StringSerializer". With the Kafka Avro Serializer, the schema is registered if needed, and then the serializer writes the data and the schema ID. The record contains a schema ID and data. key_deserializer: a fully-qualified Java class name of a Kafka Deserializer for the topic's key.

The binder documentation contains information about its design, usage, and configuration options, as well as information on how the Spring Cloud Stream concepts map onto Apache Kafka specific constructs.

With Spring Cloud Stream Kafka Streams support, keys are always deserialized and serialized by using the native Serde mechanism. The default behavior is to hash the message_key of an event to get the partition. When no message key is present, the plugin picks a partition in a round-robin fashion.
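The partition choice described above (hash the key when there is one, otherwise rotate) can be sketched as follows. The class name `PartitionChooser` is hypothetical, and CRC32 is used purely as a stand-in hash; Kafka's own Java client uses a different hash function, so the partition numbers produced here will not match a real cluster.

```python
import itertools
import zlib
from typing import Optional


class PartitionChooser:
    """Hypothetical sketch: keyed records hash to a partition, keyless ones rotate."""

    def __init__(self, num_partitions: int) -> None:
        if num_partitions <= 0:
            raise ValueError("num_partitions must be positive")
        self.num_partitions = num_partitions
        self._counter = itertools.count()

    def partition(self, key: Optional[bytes]) -> int:
        if key is None:
            # No key: walk through the partitions in round-robin order.
            return next(self._counter) % self.num_partitions
        # Key present: the same key bytes always map to the same partition.
        return zlib.crc32(key) % self.num_partitions


chooser = PartitionChooser(3)
keyless = [chooser.partition(None) for _ in range(4)]
keyed = chooser.partition(b"customer-17")
```

Because the hash is computed over the serialized key bytes, changing the key serializer can change which partition a given key lands on.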

The deserializer automatically trusts the package of the default type, so it's not necessary to add it there. As such, these implementations can be used in several projects. Key password: password for the key file (type: string). spring.kafka.producer.key-serializer specifies the serializer class for keys. Versions used: Apache Kafka kafka_2.11-1.0.0, Maven 3.5.

Next we need to create a ConsumerFactory and pass the consumer configuration, the key deserializer and the typed JsonDeserializer<>(Foo.class). spring.kafka.consumer.key-deserializer: consumer key deserialization class. value.serializer: name of the class that will be used to serialize a value.

Method detail: default void configure(Map<String,?> configs, boolean isKey) configures this class.

Identifier of a CDI bean that provides the default Kafka consumer/producer configuration for this channel. Topic name: Kafka topic name where messages are to be written to or read from. The password of the private key in the key store file. One of the following can be set: org.apache.kafka.common.serialization.StringSerializer.

Fill in the project metadata, select Spring Boot version 2.5.4 and click Generate. Recently, I have used Confluent 3.3.1. In Spring Cloud Stream there are two binders supporting the Kafka platform. It describes how to use Spring Cloud Stream with RabbitMQ in order to build event-driven microservices. In Spring Boot, the name of the application is by default the name of the consumer group for Kafka Streams.

Assuming that your data is JSON objects, you can use ObjectNode. Luckily, the Spring Kafka framework includes a support package that contains a JSON (de)serializer that uses a Jackson ObjectMapper under the covers. This is fully provided and supported by Spring Kafka. It is the best option as a default choice. Add trusted packages to the default type mapper; '*' means deserialize all packages. Therefore, we should set this in application.yml. spring.kafka.consumer.properties.spring.json.trusted.packages specifies a comma-delimited list of package patterns allowed for deserialization.

import org.apache.kafka.common.serialization.Serde; import org.apache.kafka.common.serialization.Serdes; // Use the default serializer for record keys (here: region as String) by not specifying the key serde, // but override the default serializer for record values (here: userCount as Long).

Implement Custom Value Serializer for Kafka: You can send messages with different data types to Kafka topics. This could be set to any of the accepted Serializer values as defined by the Kafka standards and by your site. Previously we saw how to create a Spring Kafka consumer and producer by manually configuring the Producer and Consumer. All Known Subinterfaces: ExtendedSerializer

I mentioned how to use this in C# yesterday. It is based on Confluent's Kafka serializer. In C#, a Kafka producer can fail with the error 'Value serializer not specified and there is no default serializer defined for type'.

As you may have observed, KafkaProducer needs a key and value serializer, and KafkaConsumer needs the matching deserializers. In this example both the key and the value are strings, hence we are using StringSerializer. If you register a class as a default serde, Kafka Streams will at some point create an instance of that class via reflection. The key_serializer and value_serializer instruct how to turn the key and value objects the user provides into bytes.
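Since key_serializer and value_serializer are plain callables that return bytes, a minimal pair for string data might look like the sketch below. The function names are hypothetical. The commented-out producer line shows how such callables would be handed to kafka-python's KafkaProducer and assumes a broker at localhost:9092; it is not executed here.

```python
from typing import Optional


def string_serializer(value: Optional[str]) -> Optional[bytes]:
    """Turn a user-supplied string into bytes; pass None through unchanged."""
    if value is None:
        return None
    return value.encode("utf-8")


def string_deserializer(raw: Optional[bytes]) -> Optional[str]:
    """Inverse of string_serializer, for the consuming side."""
    if raw is None:
        return None
    return raw.decode("utf-8")


# Assumed usage with kafka-python (requires a running broker, so left as a comment):
# producer = KafkaProducer(
#     bootstrap_servers="localhost:9092",
#     key_serializer=string_serializer,
#     value_serializer=string_serializer,
# )

round_trip = string_deserializer(string_serializer("region-eu"))
```

Handling None explicitly matters because a record key is optional, so the serializer may be handed a missing key.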

By default, the Kafka implementation serializes and deserializes ClipboardPages to and from JSON strings. Apache Avro is a data serialization system. The above snippet assumes a Kafka broker is running on localhost:9092.

The Kafka cluster stores streams of records in categories called topics. The task expects an input parameter named kafka_request as part of the task's input with the following details: bootStrapServers for connecting to the given Kafka cluster. Deserializer (kafka 2.1.0 API). Type Parameters: T - type to be deserialized into. Now, I'm going to share how to unit test your Kafka Streams code. By default, the Kafka serializer uses the String type for key and value.

Its main use is as the KEY_FORMAT of key-less streams, especially where a default key format that supports schema inference has been set via ksql.persistence.default.format.key. kafka-console-consumer is a command-line consumer that reads data from a Kafka topic. To do this, we need to set ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG to the JsonDeserializer class. JsonDeserializer is a generic org.apache.kafka.common.serialization.Deserializer for receiving JSON from Kafka and returning Java objects; it can be constructed as new JsonDeserializer<>(targetType, objectMapper). This is optional for the client.

The Kafka producer creates a record/message, which is an Avro record. But maintaining schemas is hard. By default, the deserializer retrieves the schema from Apicurio Registry using a global ID, which is specified in the message being consumed. Here, I will show you how to send Avro messages from the client application and from Kafka Streams using the Kafka Schema Registry.

server.port: we will use a different application port, as the default port is already used by the Kafka producer application. In this Spring Kafka multiple-consumer Java configuration example, we learned to create multiple topics using the TopicBuilder API.

Usually data is assigned to a partition randomly, unless we provide it with a key. Create a ProducerRecord object.

Custom serializers: Apache Kafka stores and transports byte arrays in its topics. It provides pre-built serializers and deserializers for several basic types, but it also offers the capability to implement custom (de)serializers. In order to serialize our own objects, we'll implement the Serializer interface. With 0.8.2, you need to pick a serializer yourself, either StringSerializer or ByteArraySerializer, which come with the API, or build your own.
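What building your own serializer involves can be sketched with a small JSON-based pair. This Python sketch only mirrors the shape of the Java Serializer and Deserializer interfaces (a serialize(topic, data) method returning bytes and its inverse). The `Message` class, its fields, and both class names are hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class Message:
    """Hypothetical payload type used only for this illustration."""
    id: int
    text: str


class MessageSerializer:
    def serialize(self, topic: str, data: Optional[Message]) -> Optional[bytes]:
        # Kafka transports byte arrays, so the object becomes UTF-8 encoded JSON.
        if data is None:
            return None
        return json.dumps(asdict(data), sort_keys=True).encode("utf-8")


class MessageDeserializer:
    def deserialize(self, topic: str, data: Optional[bytes]) -> Optional[Message]:
        # Reverse the steps: bytes -> JSON text -> dict -> Message.
        if data is None:
            return None
        return Message(**json.loads(data.decode("utf-8")))


original = Message(id=1, text="hi")
wire_bytes = MessageSerializer().serialize("demo-topic", original)
restored = MessageDeserializer().deserialize("demo-topic", wire_bytes)
```

The topic argument is unused in this sketch; it is kept because a serializer may need it, for example to look up a schema per topic.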

Create a KafkaProducer object. Specifies whether the serializer tries to create an artifact in the registry. Used by serializers only. The ProducerFactory implementation for a singleton shared Producer instance. Currently, there are two ways to write to and read from Kafka: via producer and consumer, or via Kafka Streams. In the following tutorial, we will configure, build and run an example in which we will send/receive an Avro message to/from Apache Kafka using Apache Avro, Spring Kafka, Spring Boot and Maven.
