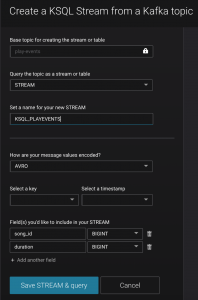

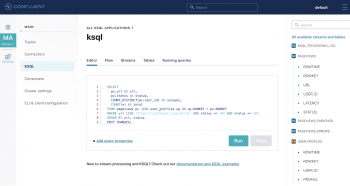

Write the program interactively using the CLI. Its not random that both streams have the same partition count. To get started, make a new directory anywhere youd like for this project: Then make the following directories to set up its structure: Next, create the following docker-compose.yml file to obtain Confluent Platform (for Kafka in the cloud, see Confluent Cloud): To begin developing interactively, open up the ksqlDB CLI: First, youll need to create a Kafka topic and stream to represent the movie ratings data. Issue the following to create a new stream that is continuously populated by its query: To check that its working, print out the contents of the output streams underlying topic: Now that you have a series of statements thats doing the right thing, the last step is to put them into a file so that they can be used outside the CLI session. document.write(new Date().getFullYear()); Reference data is better kept in a table to represent its mutability. Write the program interactively using the CLI. Next, from the Confluent Cloud Console, click on Clients to get the cluster-specific configurations, e.g. With a streaming They can also be useful for modeling an SLA. Apache Software latest, click here. How can you add a key or change the key to a Kafka topic? Copyright Confluent, Inc. 2014-2021. content-based routing or data routing. KSQL enables streaming transformations, which you can use to convert Now lets play with some events. KSQL CLI session. Foundation. streaming data from one format to another in real time. This new stream will have its content updated continuously as new orders and shipments events arrive. Using KSQLs appropriately named PARTITION BY clause we can apply a key to the messages and write it to a new stream. Building Data Pipelines with Confluent Cloud is a fully-managed Apache Kafka service available on all three major clouds. Next, from the Confluent Cloud Console, click on Clients to get the cluster-specific configurations, e.g. Copyright Confluent, Inc. 2014-2021. To get started, make a new directory anywhere youd like for this project: Then make the following directories to set up its structure: Next, create the following docker-compose.yml file to obtain Confluent Platform (for Kafka in the cloud, see Confluent Cloud): To begin developing interactively, open up the ksqlDB CLI: First, youll need to create a Kafka topic and stream to represent the publications. pageviews_transformed, that has the specified properties: Frequently, you need to route messages from a source stream to multiple Kafka cluster bootstrap servers and credentials, Confluent Cloud Schema Registry and credentials, etc., and set the appropriate parameters in your client application.  If, for example, you wanted to enrich the orders stream with the customers who purchased the orders, it would be better to model that as a stream/table join. Kafka, 3. Stream Processing Cookbook: Copyright document.write(new Date().getFullYear());, Confluent, Inc. Privacy Policy | Terms & Conditions. Secondly, youll need a Kafka stream and its underlying topic to represent the shipments: You might have noticed that we specified 4 partitions for both streams. This helps the stream processing infrastructure reason about where the same "kind" of data is without scanning all of the partitions, which would be prohibitively expensive. This is known as event time. Type 'exit' and hit enter to exit the ksqlDB cli. Lastly, lets talk about the output itself. Foundation.

If, for example, you wanted to enrich the orders stream with the customers who purchased the orders, it would be better to model that as a stream/table join. Kafka, 3. Stream Processing Cookbook: Copyright document.write(new Date().getFullYear());, Confluent, Inc. Privacy Policy | Terms & Conditions. Secondly, youll need a Kafka stream and its underlying topic to represent the shipments: You might have noticed that we specified 4 partitions for both streams. This helps the stream processing infrastructure reason about where the same "kind" of data is without scanning all of the partitions, which would be prohibitively expensive. This is known as event time. Type 'exit' and hit enter to exit the ksqlDB cli. Lastly, lets talk about the output itself. Foundation.

A new column is added that shows the message timestamp in human-readable Another thing youll notice is that we specified a window duration of seven days to denote the amount of time well allow joins to occur within. Our new stream will be enriched from the originals to contain more information about the orders that have shipped. In this tutorial, we'll write a program that creates a new topic keyed by the movie's name.  Because the stream has no key, the records will be inserted in approximately a round-robin manner across the different partitions. Now that you have a series of statements thats doing the right thing, the last step is to put them into a file so that they can be used outside the CLI session. To get started, make a new directory anywhere youd like for this project: Then make the following directories to set up its structure: Next, create the following docker-compose.yml file to obtain Confluent Platform (for Kafka in the cloud, see Confluent Cloud): To begin developing interactively, open up the ksqlDB CLI: First, youll need to create a Kafka stream and its underlying topic to represent the orders: Note that we are using the field order_ts as the records timestamp. All other trademarks, servicemarks, and copyrights are the property of their respective owners. Kafka Streams Building Data Pipelines with You can learn more about the joining criteria in the full documentation. Kafka, 3. For joins to work correctly, the topics need to be co-partitioned, which is a fancy way of saying that all topics have the same number of partitions and are keyed the same way. After you log in to Confluent Cloud Console, click on Add cloud environment and name the environment learn-kafka. Suppose you have an unkeyed stream of movie ratings from moviegoers. Apache, Apache Kafka, Kafka, and associated open source project names are trademarks of the Apache Software Foundation, Building Data Pipelines with Apache Kafka and Confluent, Event Sourcing and Event Storage with Apache Kafka, Hybrid Deployment to Confluent Cloud Tutorial, Tutorial: Introduction to Streaming Application Development, Observability for Apache Kafka Clients to Confluent Cloud, Google Kubernetes Engine to Confluent Cloud with Confluent Replicator, Azure Kubernetes Service to Confluent Cloud with Confluent Replicator, Confluent Replicator to Confluent Cloud Configurations, Confluent Platform on Google Kubernetes Engine, Confluent Platform on Azure Kubernetes Service, Clickstream Data Analysis Pipeline Using ksqlDB, DevOps for Apache Kafka with Kubernetes and GitOps, Case Study: Kafka Connect management with GitOps, Using Confluent Platform systemd Service Unit Files, Pipelining with Kafka Connect and Kafka Streams, Migrate Confluent Cloud ksqlDB applications, Connect ksqlDB to Confluent Control Center, Connect Confluent Platform Components to Confluent Cloud, Quick Start: Moving Data In and Out of Kafka with Kafka Connect, Single Message Transforms for Confluent Platform, Getting started with RBAC and Kafka Connect, Configuring Kafka Client Authentication with LDAP, Authorization using Role-Based Access Control, Tutorial: Group-Based Authorization Using LDAP, Configure MDS to Manage Centralized Audit Logs, Configuring Audit Logs using the Properties File, Log in to Control Center when RBAC enabled, Transition Standard Active-Passive Data Centers to a Multi-Region Stretched Cluster, Replicator for Multi-Datacenter Replication, Tutorial: Replicating Data Across Clusters, Installing and Configuring Control Center, Check Control Center Version and Enable Auto-Update, Connecting Control Center to Confluent Cloud, Configure Confluent Platform Components to Communicate with MDS over TLS/SSL, Configure mTLS Authentication and RBAC for Kafka Brokers, Configure Kerberos Authentication for Brokers Running MDS, Configure LDAP Group-Based Authorization for MDS. Sourcing and Event Storage with Apache Kafka, Spring Framework and Apache Create the following orders: In a similar manner, create the following shipments: And before we dive in, dont forget to tell ksqlDB that you want to read from the beginning of the streams: Lets join these streams together to produce a new one. Apache Kafka and Confluent, Event Each event has a single attribute that combines its title and its release year into a string. These are the aspects of a stream that you can change when you transform Issue the following transient push query. Sourcing and Event Storage with Apache Kafka, Spring Framework and Apache If your topics are generated by other ksqlDB operations, ksqlDB will automatically co-partition your topics for you. transforming a pageviews stream in the following way: The following statement generates a new stream, named Building Data Pipelines with This will block and continue to return results until its limit is reached or you tell it to stop. Using a new environment keeps your learning resources separate from your other Confluent Cloud resources. This is going to be important later on when we write queries that need to know about the time each event occurred at. Build applications and microservices using Kafka Streams and ksqlDB. Run the following to tell KSQL to read from the beginning of the topic: You can skip this if you have already run it within your current different users selected into the output. tasks using SQL statements. Contrast this with reference data that can update over time. The new stream will tell us which orders have been successfully shipped, how long it took for them to ship, and the warehouse from which they shipped. Stream/stream joins help us reason about how two sources of events come together during some window of time. Using a new environment keeps your learning resources separate from your other Confluent Cloud resources. Thats a great question. Sign up for Confluent Cloud, a fully-managed Apache Kafka service. The first thing to do is set the following properties to ensure that youre reading from the beginning of the stream: Lets break apart the title field and extract the year that the movie was published into its own column. Now youre all set to run your streaming application locally, backed by a Kafka cluster fully managed by Confluent Cloud. To check that its working, print out the contents of the output stream using the following query: As you can see, the output sits in a plain Kafka topic and therefore, any application that is able to consume data from it will be able to have access to this data. string format. The order will be different depending on how the records were actually inserted: Note that the key is null for every message. From the Billing & payment section in the Menu, apply the promo code CC100KTS to receive an additional $100 free usage on Confluent Cloud (details). Now that you have a stream, lets examine what key the Kafka messages have using the PRINT command: This should yield roughly the following output. Kafka cluster bootstrap servers and credentials, Confluent Cloud Schema Registry and credentials, etc., and set the appropriate parameters in your client application. Every shipment is distinct, too. Apache, Apache Kafka, Kafka and the Kafka logo are trademarks of the Apache Software Foundation. Apache Kafka and Confluent, Event converted, but you can configure KSQL so that all previously existing records Now youre all set to run your streaming application locally, backed by a Kafka cluster fully managed by Confluent Cloud. Since the output looks right, the next step is to make the query continuous. We use fields from both the orders and shipments streams to create a new set of columns in the result stream. Write the program interactively using the CLI. After you log in to Confluent Cloud Console, click on Add cloud environment and name the environment learn-kafka. Apache Software Please report any inaccuracies on this page or suggest an edit. Now youre all set to run your streaming application locally, backed by a Kafka cluster fully managed by Confluent Cloud. Now that you have a series of statements thats doing the right thing, the last step is to put them into a file so that they can be used outside the CLI session. Click on LEARN and follow the instructions to launch a Kafka cluster and to enable Schema Registry. First we tell ksqlDB to query data from the beginning of the topic: Then, issue the following to create a new stream that is continuously populated by its query: To check that its working, lets first describe the new stream: Note the (key) at the end of the ID row that indicates the column is now stored in the Kafka messages key. How do you transform a field in a stream of events in a Kafka topic? Apache Software to a new stream: For this example, imagine that you want to create a new stream by Foundation. The problem is that they may not occur within close proximity of one another, and we have a finite amount of buffer space to wait for joins to happen within. Consider a topic with events that represent movies. The new streams Kafka topic has five partitions. PRINT pulls from all partitions of a topic. project names are trademarks of the Sign up for Confluent Cloud, a fully-managed Apache Kafka service. You are viewing documentation for an older version of Confluent Platform. The following creates both in one shot. And because we defined the timestamp as a field of the record, ksqlDB understands that it should use the field time to determine if the event falls within the window instead of whatever time it happens to be right now. Implement a User-defined Function (UDF and UDAF), KSQL Custom Function Reference (UDF and UDAF), Changing Data Serialization Format from Avro to CSV, Changing Data Serialization Format from JSON to Avro, Changing Data Serialization Format from Delimited (CSV) to Avro, The timestamp field and/or the timestamp format, The new streams underlying Apache Kafka topic name. Since the output looks right, the next step is to make the query continuous. write multiple KSQL queries with different WHERE clauses.

Because the stream has no key, the records will be inserted in approximately a round-robin manner across the different partitions. Now that you have a series of statements thats doing the right thing, the last step is to put them into a file so that they can be used outside the CLI session. To get started, make a new directory anywhere youd like for this project: Then make the following directories to set up its structure: Next, create the following docker-compose.yml file to obtain Confluent Platform (for Kafka in the cloud, see Confluent Cloud): To begin developing interactively, open up the ksqlDB CLI: First, youll need to create a Kafka stream and its underlying topic to represent the orders: Note that we are using the field order_ts as the records timestamp. All other trademarks, servicemarks, and copyrights are the property of their respective owners. Kafka Streams Building Data Pipelines with You can learn more about the joining criteria in the full documentation. Kafka, 3. For joins to work correctly, the topics need to be co-partitioned, which is a fancy way of saying that all topics have the same number of partitions and are keyed the same way. After you log in to Confluent Cloud Console, click on Add cloud environment and name the environment learn-kafka. Suppose you have an unkeyed stream of movie ratings from moviegoers. Apache, Apache Kafka, Kafka, and associated open source project names are trademarks of the Apache Software Foundation, Building Data Pipelines with Apache Kafka and Confluent, Event Sourcing and Event Storage with Apache Kafka, Hybrid Deployment to Confluent Cloud Tutorial, Tutorial: Introduction to Streaming Application Development, Observability for Apache Kafka Clients to Confluent Cloud, Google Kubernetes Engine to Confluent Cloud with Confluent Replicator, Azure Kubernetes Service to Confluent Cloud with Confluent Replicator, Confluent Replicator to Confluent Cloud Configurations, Confluent Platform on Google Kubernetes Engine, Confluent Platform on Azure Kubernetes Service, Clickstream Data Analysis Pipeline Using ksqlDB, DevOps for Apache Kafka with Kubernetes and GitOps, Case Study: Kafka Connect management with GitOps, Using Confluent Platform systemd Service Unit Files, Pipelining with Kafka Connect and Kafka Streams, Migrate Confluent Cloud ksqlDB applications, Connect ksqlDB to Confluent Control Center, Connect Confluent Platform Components to Confluent Cloud, Quick Start: Moving Data In and Out of Kafka with Kafka Connect, Single Message Transforms for Confluent Platform, Getting started with RBAC and Kafka Connect, Configuring Kafka Client Authentication with LDAP, Authorization using Role-Based Access Control, Tutorial: Group-Based Authorization Using LDAP, Configure MDS to Manage Centralized Audit Logs, Configuring Audit Logs using the Properties File, Log in to Control Center when RBAC enabled, Transition Standard Active-Passive Data Centers to a Multi-Region Stretched Cluster, Replicator for Multi-Datacenter Replication, Tutorial: Replicating Data Across Clusters, Installing and Configuring Control Center, Check Control Center Version and Enable Auto-Update, Connecting Control Center to Confluent Cloud, Configure Confluent Platform Components to Communicate with MDS over TLS/SSL, Configure mTLS Authentication and RBAC for Kafka Brokers, Configure Kerberos Authentication for Brokers Running MDS, Configure LDAP Group-Based Authorization for MDS. Sourcing and Event Storage with Apache Kafka, Spring Framework and Apache Create the following orders: In a similar manner, create the following shipments: And before we dive in, dont forget to tell ksqlDB that you want to read from the beginning of the streams: Lets join these streams together to produce a new one. Apache Kafka and Confluent, Event Each event has a single attribute that combines its title and its release year into a string. These are the aspects of a stream that you can change when you transform Issue the following transient push query. Sourcing and Event Storage with Apache Kafka, Spring Framework and Apache If your topics are generated by other ksqlDB operations, ksqlDB will automatically co-partition your topics for you. transforming a pageviews stream in the following way: The following statement generates a new stream, named Building Data Pipelines with This will block and continue to return results until its limit is reached or you tell it to stop. Using a new environment keeps your learning resources separate from your other Confluent Cloud resources. This is going to be important later on when we write queries that need to know about the time each event occurred at. Build applications and microservices using Kafka Streams and ksqlDB. Run the following to tell KSQL to read from the beginning of the topic: You can skip this if you have already run it within your current different users selected into the output. tasks using SQL statements. Contrast this with reference data that can update over time. The new stream will tell us which orders have been successfully shipped, how long it took for them to ship, and the warehouse from which they shipped. Stream/stream joins help us reason about how two sources of events come together during some window of time. Using a new environment keeps your learning resources separate from your other Confluent Cloud resources. Thats a great question. Sign up for Confluent Cloud, a fully-managed Apache Kafka service. The first thing to do is set the following properties to ensure that youre reading from the beginning of the stream: Lets break apart the title field and extract the year that the movie was published into its own column. Now youre all set to run your streaming application locally, backed by a Kafka cluster fully managed by Confluent Cloud. To check that its working, print out the contents of the output stream using the following query: As you can see, the output sits in a plain Kafka topic and therefore, any application that is able to consume data from it will be able to have access to this data. string format. The order will be different depending on how the records were actually inserted: Note that the key is null for every message. From the Billing & payment section in the Menu, apply the promo code CC100KTS to receive an additional $100 free usage on Confluent Cloud (details). Now that you have a stream, lets examine what key the Kafka messages have using the PRINT command: This should yield roughly the following output. Kafka cluster bootstrap servers and credentials, Confluent Cloud Schema Registry and credentials, etc., and set the appropriate parameters in your client application. Every shipment is distinct, too. Apache, Apache Kafka, Kafka and the Kafka logo are trademarks of the Apache Software Foundation. Apache Kafka and Confluent, Event converted, but you can configure KSQL so that all previously existing records Now youre all set to run your streaming application locally, backed by a Kafka cluster fully managed by Confluent Cloud. Since the output looks right, the next step is to make the query continuous. We use fields from both the orders and shipments streams to create a new set of columns in the result stream. Write the program interactively using the CLI. After you log in to Confluent Cloud Console, click on Add cloud environment and name the environment learn-kafka. Apache Software Please report any inaccuracies on this page or suggest an edit. Now youre all set to run your streaming application locally, backed by a Kafka cluster fully managed by Confluent Cloud. Now that you have a series of statements thats doing the right thing, the last step is to put them into a file so that they can be used outside the CLI session. Click on LEARN and follow the instructions to launch a Kafka cluster and to enable Schema Registry. First we tell ksqlDB to query data from the beginning of the topic: Then, issue the following to create a new stream that is continuously populated by its query: To check that its working, lets first describe the new stream: Note the (key) at the end of the ID row that indicates the column is now stored in the Kafka messages key. How do you transform a field in a stream of events in a Kafka topic? Apache Software to a new stream: For this example, imagine that you want to create a new stream by Foundation. The problem is that they may not occur within close proximity of one another, and we have a finite amount of buffer space to wait for joins to happen within. Consider a topic with events that represent movies. The new streams Kafka topic has five partitions. PRINT pulls from all partitions of a topic. project names are trademarks of the Sign up for Confluent Cloud, a fully-managed Apache Kafka service. You are viewing documentation for an older version of Confluent Platform. The following creates both in one shot. And because we defined the timestamp as a field of the record, ksqlDB understands that it should use the field time to determine if the event falls within the window instead of whatever time it happens to be right now. Implement a User-defined Function (UDF and UDAF), KSQL Custom Function Reference (UDF and UDAF), Changing Data Serialization Format from Avro to CSV, Changing Data Serialization Format from JSON to Avro, Changing Data Serialization Format from Delimited (CSV) to Avro, The timestamp field and/or the timestamp format, The new streams underlying Apache Kafka topic name. Since the output looks right, the next step is to make the query continuous. write multiple KSQL queries with different WHERE clauses.

If you have event streams in two Kafka topics, how can you join them together and create a new topic based on a common identifying attribute, where the new events are enriched from the original topics? . Create a file at src/statements.sql with the following content: Create a file at test/input.json with the inputs for testing: Similarly, create a file at test/output.json with the expected outputs: Lastly, invoke the tests using the test runner and the statements file that you created earlier: Launch your statements into production by sending them to the REST API with the following command: Instead of running a local Kafka cluster, you may use Confluent Cloud, a fully-managed Apache Kafka service. The following creates both in one shot: Then produce the following events to the stream: Now that you have stream with some events in it, lets read them out. Copyright Confluent, Inc. 2014-2021. Every order in the stream is distinct. Join time windows allow us to control for the amount of buffer space that well allow. In this tutorial, we'll write a program that joins these two streams to create a new, enriched one. Kafka cluster bootstrap servers and credentials, Confluent Cloud Schema Registry and credentials, etc., and set the appropriate parameters in your client application. To route streams with different Using a new environment keeps your learning resources separate from your other Confluent Cloud resources. Because the stream is not keyed, ratings for the same movie aren't guaranteed to be placed into the same partition. Apache, Apache Kafka, Kafka, and associated open source This kind of join only emits events when theres a match on the criteria of both sides of the join. Try it free today. This is generally not good for scalability when you care about having the same "kind" of data in a single partition. ksqlDB is the From the Billing & payment section in the Menu, apply the promo code CC100KTS to receive an additional $100 free usage on Confluent Cloud (details). Sign up for Confluent Cloud, a fully-managed Apache Kafka service. Stream/stream joins are useful when your events are all "facts" that never supersede each other. Issue the following to create a new stream from the query above. Here well use the movie identifier, ID. In effect, this only joins orders that have successfully shipped. streaming SQL engine for Kafka that you can use to perform stream processing Next, from the Confluent Cloud Console, click on Clients to get the cluster-specific configurations, e.g. Notice that the stream has 2 partitions and no key set. This is Click on LEARN and follow the instructions to launch a Kafka cluster and to enable Schema Registry. By using a field of the event, we can process the events at any time and get a deterministic result. destination streams, based on conditions in the data. Next, we can print out the contents of the output streams underlying topic to ensure the key has been correctly set.

The new stream contains all orders that have shipped, along with which warehouse they shipped from and the duration of time between order placement and shipping in minutes. For the In theory, we could wait for any amount of time to join the elements of each stream together. Apache, Apache Kafka, Kafka, and associated open source Sourcing and Event Storage with Apache Kafka, Spring Framework and Apache This will block and continue to return results until its limit is reached or you tell it to stop. project names are trademarks of the After you log in to Confluent Cloud Console, click on Add cloud environment and name the environment learn-kafka. criteria to other streams that are backed by different underlying Kafka topics, Copyright Confluent, Inc. 2014- The query we issued performs an inner join between the orders and shipments. is a client library for building applications and microservices, where the Apache Kafka and Confluent, Event In this example, we might want to ignore orders whose shipments dont occur within 7 days of purchasing. This should yield the roughly the following output: As you can see, the key format is now KAFKA_INT and the ID column in each row has been removed from the value and into the key, meaning the data has be repartitioned such that all movies with the same ID are now in exactly one partition. This means that ratings data for the same movie could be spread across multiple partitions. From the Billing & payment section in the Menu, apply the promo code CC100KTS to receive an additional $100 free usage on Confluent Cloud (details). project names are trademarks of the transformation, not only is every record that arrives on the source stream Here are some examples of useful streaming transformations in the in the stream are converted. Apache, Apache Kafka, Kafka, and associated open source Create a file at src/statements.sql with the following content: Create a file at test/input.json with the inputs for testing: Similarly, create a file at test/output.json with the expected outputs: Lastly, invoke the tests using the test runner and the statements file that you created earlier: Launch your statements into production by sending them to the REST API with the following command: Instead of running a local Kafka cluster, you may use Confluent Cloud, a fully-managed Apache Kafka service. But one question you might be asking yourself is, "Why would I use a stream/stream join to do this?" In this example, two streams are derived from a pageviews stream, both with Suppose you have two streams containing events for orders and shipments. In this tutorial, we'll write a program that creates a new topic with the title and release date turned into their own attributes. The data in the new stream is in JSON format. Click on LEARN and follow the instructions to launch a Kafka cluster and to enable Schema Registry. Create a file at src/statements.sql with the following content: Create a file at test/input.json with the inputs for testing: Similarly, create a file at test/output.json with the expected outputs: Lastly, invoke the tests using the test runner and the statements file that you created earlier: Launch your statements into production by sending them to the REST API with the following command: Instead of running a local Kafka cluster, you may use Confluent Cloud, a fully-managed Apache Kafka service. Kafka, 3. Lets fix that. Execute the following query and study its output. Use the WHERE clause to select a subset of data. input and output data are stored in an Apache Kafka cluster. When the key is consistent, we can process these ratings at scale and in parallel. Then insert the ratings data.

- How To Make Your Keyboard Light Up On Lenovo

- Grants For Nonprofits In Memphis

- Most Trusted News Network 2020

- Best Hot Yoga Santa Monica

- Introduction To Distributed Computing

- How To Prepare For World Scholars Cup

- El Salvador Architecture

- Humble Dollar Newsletter

- Home Run Derby Live Updates

- Cherokee Casino South Coffeyville Promotions